The next generation of audio

Surroundscapes: the power of immersive sound

Geoff Taylor, CEO, the BPI: “Cutting-edge new tech, such as immersive audio used in VR and other applications, give us a glimpse of how this exciting new world of consumption and entertainment will take shape.”

In the early days of silent film, music was performed live. A band or pianist would play in front of the screen, serving as commentary for the narrative’s action and flow. In 1927, music and sound were recorded and printed onto film for the first time. Slowly, as film technology became more sophisticated, it encompassed multiple channels of sound, with a number of speakers placed around the theatre. Eventually, surround sound was developed, and became a critical factor in the success of the overall cinematic experience.

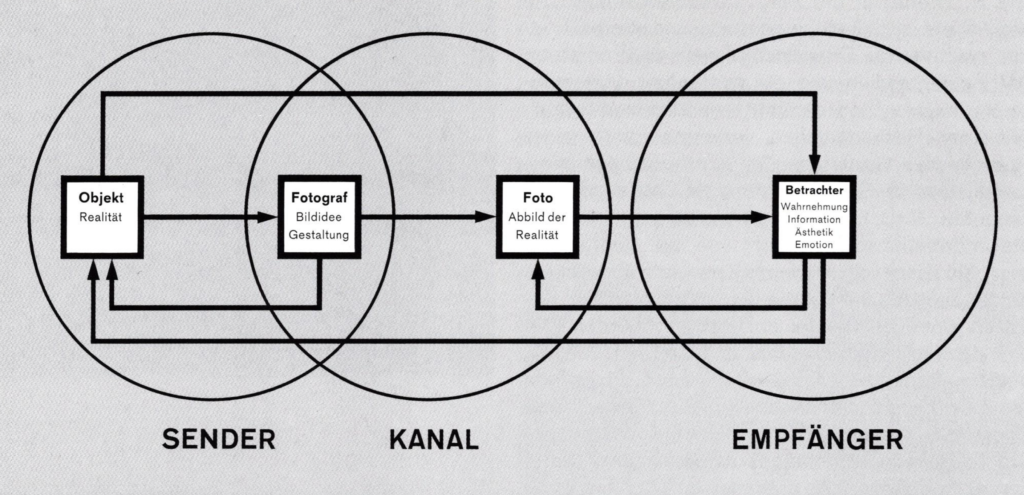

Immersive content is no different. If one thinks of virtual reality as an attempt to create an alternative reality, the brain needs audio cues to match the visuals to be able to buy into the illusion. The user has to feel present in the experience, and can only feel present if all the cues received are completely natural. If the sound is flat and coming from just one place, the spell will be broken.

When delivered successfully, immersive audio creates the sensation of height around the user, transporting them into a more thrilling experience. Because sound can alert users to something behind or above them, it’s important that users realise they are able to move around within immersive experiences. For a long time, the industry has focused on the visuals when creating virtual reality (VR) and augmented reality (AR) experiences, but visuals are only part of the environment.

This year, at SXSW, Tribeca and the Venice Film Festival, there was a noticeable rise in sound-led immersive experiences: sound has become a powerful storytelling tool.

Last year, Bose launched BoseAR and with it three products to transform AR audio. Launched alongside these products, the software to create AR content is now available, with the world’s first audio AR platform: Traverse.

At SXSW, Traverse’s “From Elvis in Memphis”, an AR-based piece of content, allowed users to experience the music of Elvis Presley by walking through a physical space. The experience is created in such a way that it’s like being in the studio with Elvis; it’s possible to walk right up to him and his band members.

In the UK, Abbey Road Studios is one of the most famous recording studios in the world. It has been in use since 1931, and has famously provided recording facilities for talents such as The Beatles, Pink Floyd and Aretha Franklin. Abbey Road is the only facility in the UK to offer both scoring and film sound post-production, while the focus on immersive technology grows year on year.

Our research has identified a number of companies in the UK which are creating sound-based tools and solutions. There are even more creating sound-led immersive experiences. Two companies from our CreativeXR programme this year are doing just that: Darkfield and Abandon Normal Devices. On last year’s programme, Roomsize developed Open Space: a platform that enables the rapid construction of interactive audio experiences that occupy physical spaces. All this activity suggests that we are on the brink of a new generation of infrastructure to amplify sound in VR, AR and MR. Sound-led content will simultaneously open up new streams of possibilities for entertainment and media.

In partnership with the British Phonographic Industry (BPI) and its Innovation Hub, we are delighted to introduce Surroundscapes: The power of immersive sound. This is the latest Digital Catapult immersive showcase, which runs from July to October 2019. We will be shining a light on UK-based startups and scaleups that are either creating the latest solutions to amplify VR, AR and MR experiences with sound, or creating content that is specifically sound-led.

Surroundscapes: showcase

After a competitive selection process, Digital Catapult and the BPI welcome six of the most innovative immersive sound companies in the UK:

1.618 Digital: is an award-winning creative sound design studio that provides audio production and post-production services; immersive and spatial audio solutions for 360 video content; and games and interactive VR/AR media.

As part of this showcase, 1.618 Digital is proud to present three projects that are on the cutting edge of digital technology for modern education and storytelling and illustrate the innovative applications of immersive audio. Immersive environments allow interactions and user manipulation of objects and sounds, which has been proven to provide 40% more brain activity relating to storage and recall of information. The use of high spatial resolution audio and interactivity along with volumetric audio and 6DOF enables users to engage with stories and other content on a deeper level.

Darkfield: specialises in creating communal location-based immersive experiences inside shipping containers. These experiences place the audience in complete darkness and then deliver binaural 3D audio and other sensory elements, using the darkness to create a canvas for the imagination.

The company has a unique offer grown from over twenty years working in the immersive theatre industry, and over six years creating shows and experiences in complete darkness that use binaural audio, multi-sensory effects and content to place the audience in the centre of evolving narratives. The experiences’ greatest asset is the invitation to walk the line between what seems to be happening and what imaginations can conjure up.

MagicBeans: is a spatial audio company creating a new kind of AR audio. The company maps highly realistic audio ‘holograms’ to real-world locations, visual displays and moving objects, creating a new and emotive presence for experiential businesses and visitor attractions.

The experiences demonstrate how sound can be mapped to visual displays, to individual objects that can be picked up and interacted with, and to a full room-scale audio experience. Experience MagicBeans technology embedded in a next-generation silent disco, an immersive theatre production and a new kind of audio-visual display.

PlayLines: is an immersive AR studio that specialises in creating narrative-led immersive AR experiences in iconic venues. Its productions combine cutting-edge location-based AR technology with game design and immersive theatre techniques. The team’s work has been described as “Punchdrunk Theatre meets Pokemon Go”.

CONSEQUENCES is a groundbreaking immersive interactive audio-AR grime rap opera, created in collaboration with multi-award-winning MC Harry Shotta. Explore the AR Grime Club, follow the rhymes, and choose the ending. CONSEQUENCES gives audiences a brand new kind of night out that combines Secret Cinema, silent disco and ‘Sleep No More’.

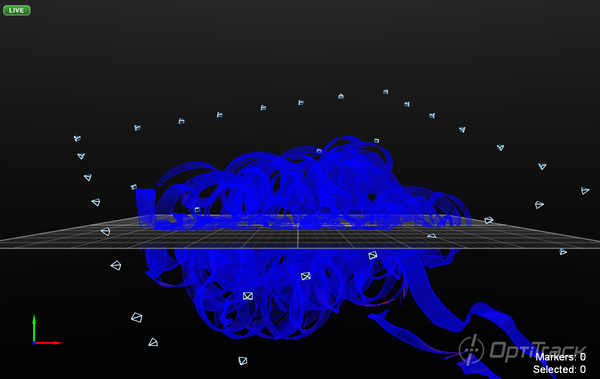

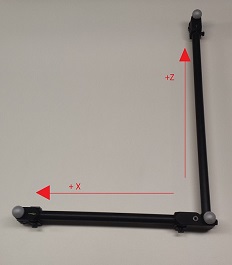

Volta: is a new way to produce music and audio, using space and movement as both a medium and an output. It is a VR application that makes spatial audio production not just easy but expressive, like a musical instrument, and easily integrates with audio production software.

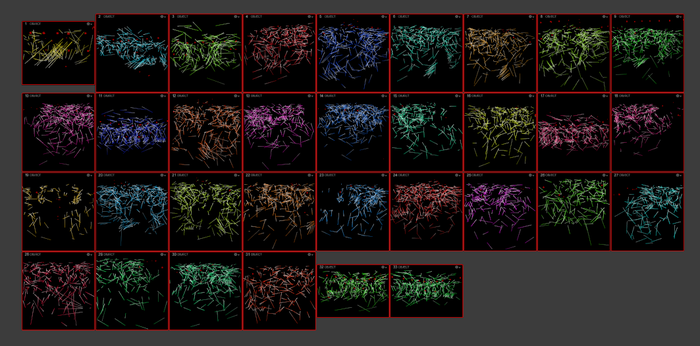

Volta achieves integration by retaining the visual and interactive elements of producing spatial audio within the platform, while keeping all audio signal processing in the producer’s or engineer’s audio production application of choice. It uses a robust communication channel that allows the user to physically grab objects, move them in space, and record and automate that motion.

ZoneMe: ZONEME’s TRUE2LIFE™ object-based sound system provides a new way to control how audiences hear things by placing the sound at the point of origination. For example, words can appear to come from an actor’s mouth, not the speakers, or a gunshot can be made to sound as if it takes place outside the room.

ZONEME aims to put the ‘reality’ into VR/AR/MR experiences by providing TRUE2LIFE™ sound. These experiences can be up to seven times more stimulating than visuals. Yet visuals have seen huge technological advances over the last 30 years that have not been matched by similar developments in audio.

Naima Camara Thursday 04 July 2019