Interview with a high school teacher

In my previous blog entry (“05 // Online interaction scenario: Experience Map”) I wrote about the protopersona Sophie which is based on a real person, her experiences mixed with my observation. In order to widen my perspective and not only examining the students/university view I am really happy to had the chance to interview Damaris about her online teaching experiences a german high school teacher.

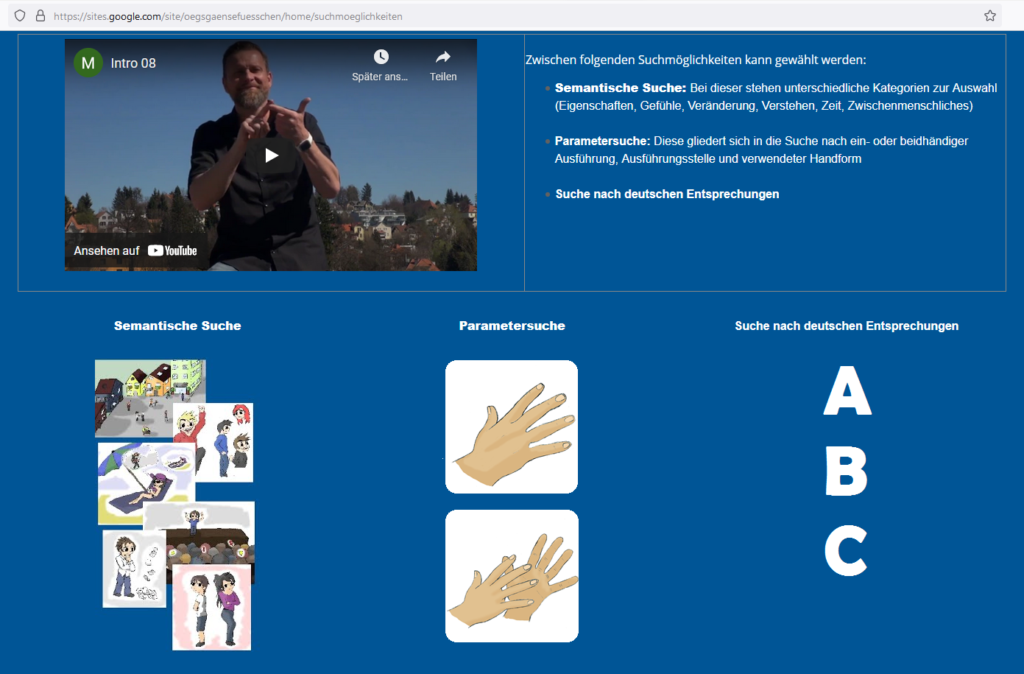

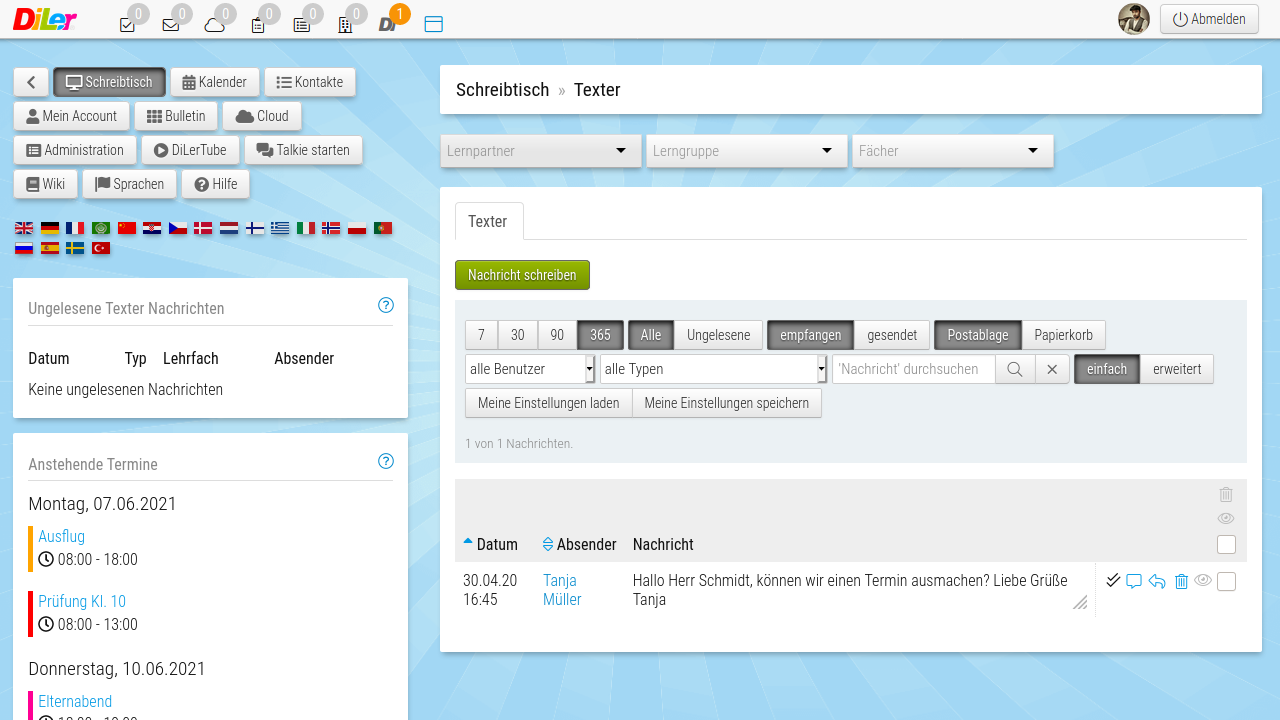

The first question I asked was how she experienced the transition from presence to online lessons. She described that teaching in the first lockdown (around march 2020) was really hard because of the missing software and also missing equipment of teachers and students. Teachers mostly had to hand out printed homework packages and wait for them to be handed in later on. These experiences lead to a better preparation for the second lockdown (december 2020 – may 2021). The school then provided tablet for teachers and rental laptop for students without a device. They could held their lesson via video conferences in the software ‘Jitsi’ and used the platform ‘DiLer’ (= Digitale Lernplattform) for communication and data exchange. Teachers took their time to learn the software by themselves, then teach the students and let them practice the tool to handle it properly. The teachers also learned how to use a visualiser which is a document camera for digitally recording printed media.

Next I asked her if she had to change something of her presence lesson curriculum for the online teaching. Damaris told me, that she had to digitize most of her teaching material in the first place to make online teaching possible. This included not only scanning printed material but also rework existing material to make it suitable. Furthermore she had to reorganise some exercises because group or partner work were difficult to implement in the first lockdown (without online support) but also in the online environment. The communication with students and parents changed from mainly verbal to mostly written communication which took a lot of time.

I also asked Damaris, if online teaching made something easier or harder for her as a teacher. She answered that sadly there was nothing that online lessons made teaching for her easy. She explained that it was really hard for her to get the control back she needs as an educator. She didn’t know if the student actually work and couldn’t properly evaluate their performances – especially things like oral grades. Her own workload was extended due to the fact that she had to check each student’s (home)work instead of just discussing results orally in the classroom.

In regard of the class Damaris observed that the online lesson environment worked well for students who already have been very structured and good in the presence lessons. The ones who need more attention from teachers in presence lessons were mostly even more behind in the online teaching environment because they couldn’t handle their self-management. One really interesting fact was that one of the students who was a rather quiet person in presence class started to become more outgoing in the online lectures. Maybe the online environment gave this person kind of a ‘safe place’ to express herself.

The fifth question I asked was if Damaris could imagine a continuation of online lessons or parts of it in the corona-free future. She answered that online teaching/communication could have some advantages in the future. One examples she mentioned was the advantage for getting more easily in touch with parents and having the opportunity to provide parents consultations late in the evening. She also observed that the students were able to develop a lot of new media literacy through the online lessons, which should definitely be encouraged in the future.

I was really surprised when she told me that the teachers only had a software introduction of online teaching but no coaching for the didactic part of it like for example how to compensate/replace group work. The digitization of high school lessons during the pandemic and also in general times seems to me a bit neglected by the government and lies in the responsibility of the educators.

By researching about the software ‘DiLer’ I came across the article “5 Fragen – 5 Antworten” with Mirko Sigloch on the platform ‘wissensschule.de’. In this article the authors explains the approach of his school to cope with digitizing of/and education now and in the future. He is sure that the current way of teaching will be insufficient to prepare the students for complex problems in the future. By developing the platform DiLer he and his colleagues wanted to create an open source platform that combines good usability and flexibility for an ideal online school environment. After their launch and testing phase they recognized how many school have been in need for such a platform. They presented the software to the ministry of culture of the federal state Baden-Württemberg but they wanted to hold on to the old structures. In the course of the article, he finally gets very emotional about the current status of digitalisation in school that seems to be rather regressive. His call for a hybrid teaching structure makes sense from my point of view when reading, but I am sure that the advantages of the present teaching structure should not be neglected. This discussion definitely needs more research from my side and I don’t see myself in the responsibility to take a position in it (but I am still curious about the different voices about this boundary topic). I already had a quick look into the theses of Lisa Rosa which I want to examine in another blog post.

Links

https://www.digitale-lernumgebung.de/

https://www.wissensschule.de/5-fragen-5-antworten-schule_digital-mit-mirko-sigloch/