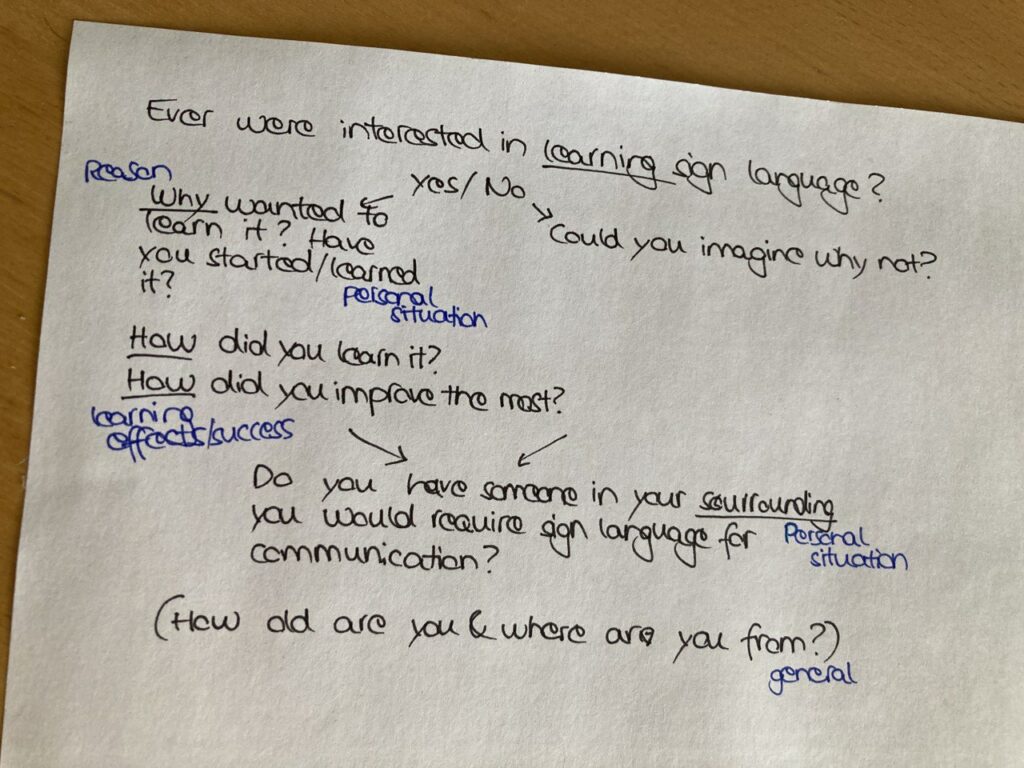

As part of the methodology, I interviewed a student who has been studying sign language in Austria for three years to become an interpreter. The 21-year-old became interested in sign language six years ago. After watching YouTube videos of deaf people signing various vocabulary, she realized she wanted to learn the language, even though no one in her immediate surroundings was hard of hearing or deaf. She was especially fascinated by the movements, which looked to her like an artistic dance, by the variety the language gains through facial expression and posture, and by how incredibly different it was from the spoken language she knew. To become very good at signing, she attended a course at Urania in Graz. When the course came to an end, she kept practicing with a private teacher so that she could finally take part in the selection process for a bachelor's degree in sign language, which she has now been studying for six semesters.

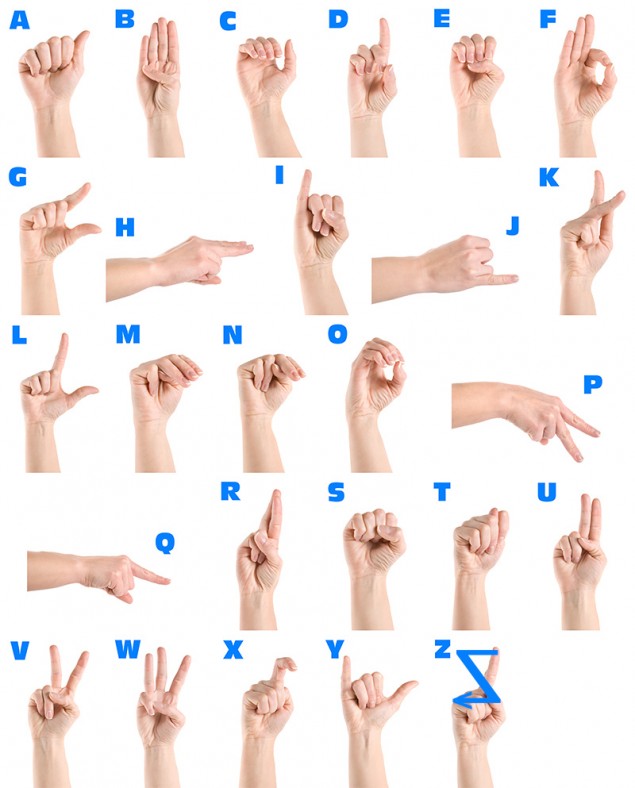

How she described her own learning process during her studies was very interesting. At the start of her beginner course, she had to write down the vocabulary the lecturer signed so that she could study it at home later. At first she did not know what to focus on or which movements were more common than others, so she simply wrote down the movements as she saw them and in a way she thought she would understand later, such as “left hand does this and the right one does that while the thumb of the left one does this …”, but at home she could not remember the exact movements. Because some lecturers do not provide prerecorded videos of the vocabulary, the students have to record the signs themselves in order to learn them. After some time, she learned that there are ways to transcribe the gestures more comprehensibly: the movement is divided into components by answering questions like “in which direction do the palms point?”, “is the movement of the hands symmetrical?”, “what shape does the hand have?”, “what do the fingers do?”, “are the shoulders involved in the movement?” and more.
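To make the idea of component-based transcription more concrete, here is a minimal sketch of how such notes could be stored in an application. It is only an illustration: the class name `SignNote`, its field names and the example values are my own assumptions and do not come from the interview or from any official notation system.

```python
from dataclasses import dataclass


@dataclass
class SignNote:
    """One transcribed sign, split into the components from the questions above.

    All field names are hypothetical; they simply mirror the questions
    the student asks herself when writing a sign down.
    """
    gloss: str                # written label for the sign
    palm_direction: str       # in which direction do the palms point?
    symmetrical: bool         # is the movement of the hands symmetrical?
    handshape: str            # what shape does the hand have?
    finger_action: str        # what do the fingers do?
    shoulders_involved: bool  # are the shoulders involved in the movement?

    def summary(self) -> str:
        """Return the note as one readable line for later study."""
        symmetry = "symmetrical" if self.symmetrical else "asymmetrical"
        shoulders = "with shoulders" if self.shoulders_involved else "without shoulders"
        return (
            f"{self.gloss}: {self.handshape} hand, palms {self.palm_direction}, "
            f"{symmetry}, fingers {self.finger_action}, {shoulders}"
        )


# Placeholder values only; this is not a description of a real sign.
example = SignNote(
    gloss="EXAMPLE",
    palm_direction="down",
    symmetrical=True,
    handshape="flat",
    finger_action="stay still",
    shoulders_involved=False,
)
print(example.summary())
```

Answering the same fixed set of questions for every sign keeps the notes comparable, which is the advantage over free-form descriptions like the ones she wrote at the beginning.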

In the beginning, the students spoke the words aloud while signing them and structured their sentences according to the grammar of the spoken language. After some time, they stopped speaking alongside the signing and started to structure the signs according to sign language grammar. She also knows, however, that current beginners no longer vocalize the signs at all and structure their sentences correctly from the start.

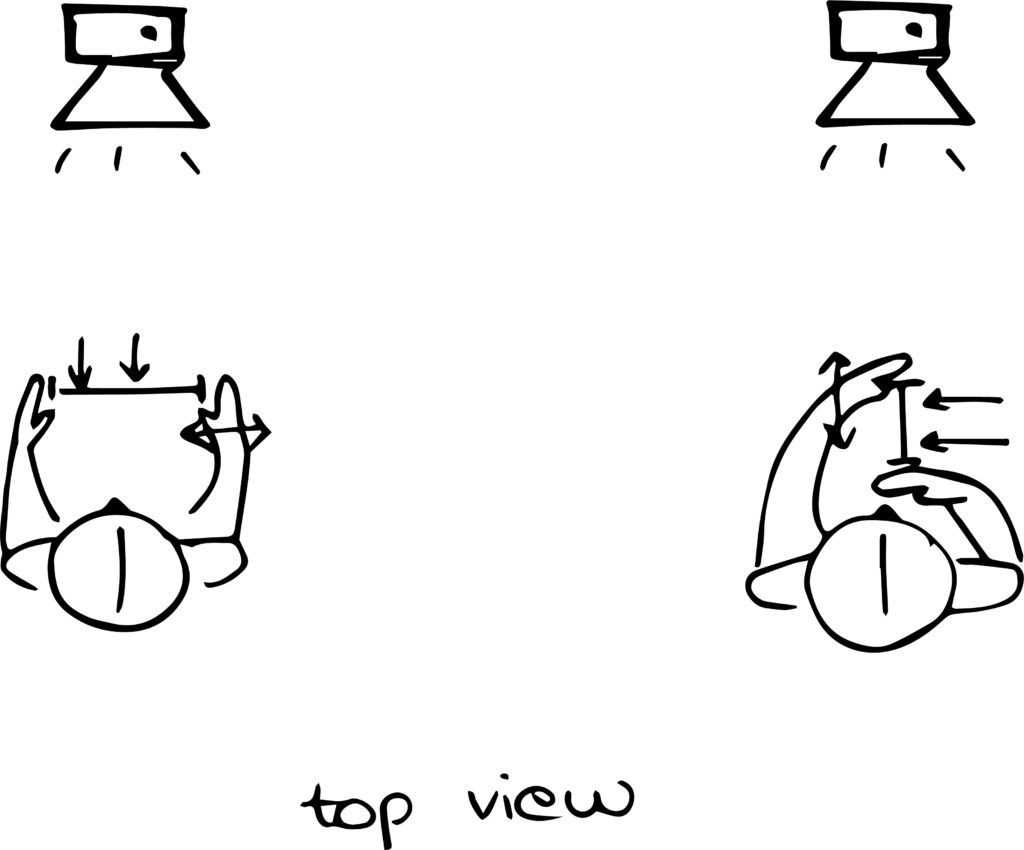

She explained that for homework or other tasks for her studies, the students record themselves signing sentences and vocabulary. To catch every detail, they have to set up the camera so that their whole upper body is visible. If there are movements outside the visible area, they either have to turn to allow a view from the side or change the angle of the camera itself. The lecturers who provide videos to their students record themselves from the front and, if needed, from the side as well when part of a movement is hidden between or behind the hands or other parts of the body.

After assessing one of her tasks, one of the lecturers advised her to show the sign for a timeline from the other perspective. When she had tried to explain when exactly in the course of the day she had eaten or taken a break, the space between her hands, which represented the timeline, was hidden behind the hands themselves and could not be seen. This showed that it is possible to adapt the sign itself and show it from the other side.

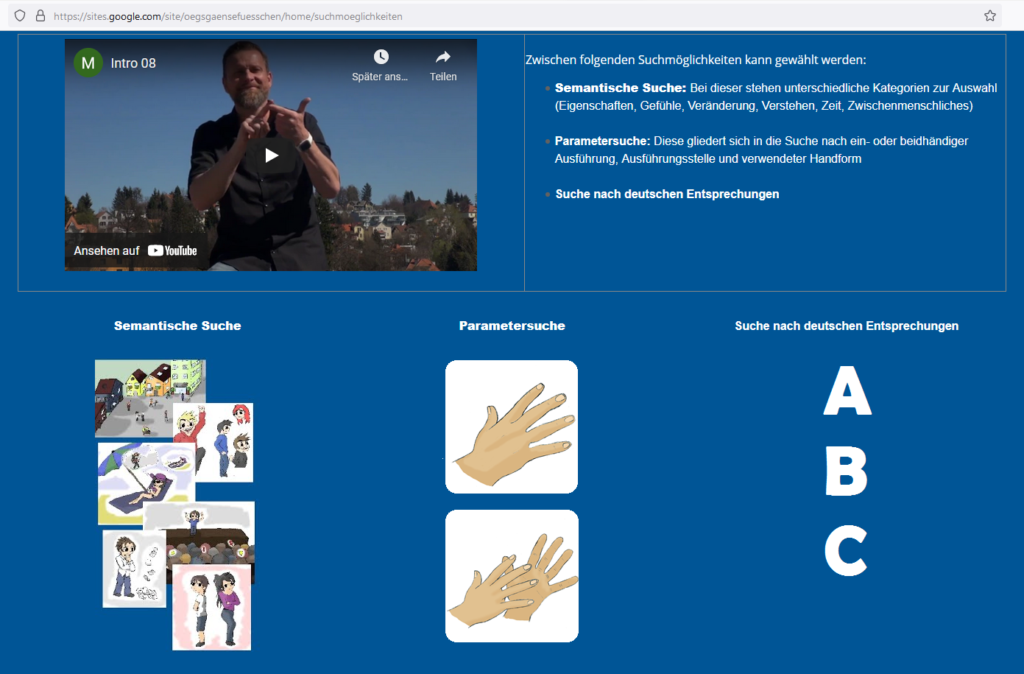

It is common for her to borrow physical dictionaries and look up vocabulary in them. These consist mainly of written descriptions, while some of the digital ones also include photographs and videos. A few examples are LedaSila https://ledasila.aau.at/, spreadthesign https://www.spreadthesign.com/de.at/search/, signdict https://signdict.org/about and more. If you want to search by the parameters of a movement because there is no specific word or sentence that describes the vocabulary or meaning, the only help for finding these “special gestures” (“Spezialgebärden”, for example “not knowing how to start”) is ögs Gänsefüßchen https://sites.google.com/site/oegsgaensefuesschen/, as it is the only site that offers a search by descriptions of movements or gestures. The site states that “special gestures” are a category that does not exist from a linguistic point of view. These signs are described and highlighted as “untranslatable”.
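A search by movement parameters, as described above, could in principle look like the following sketch. This is not how ögs Gänsefüßchen or any of the other dictionaries actually work. The entries, the field names and the function `find_signs` are hypothetical and only show the idea of filtering by description instead of by word.

```python
from typing import Any, Dict, List

# Hypothetical dictionary entries; labels and parameters are placeholders,
# not real signs.
ENTRIES: List[Dict[str, Any]] = [
    {"label": "placeholder sign A", "handshape": "flat", "palm_direction": "down", "symmetrical": True},
    {"label": "placeholder sign B", "handshape": "fist", "palm_direction": "up", "symmetrical": False},
    {"label": "placeholder sign C", "handshape": "flat", "palm_direction": "up", "symmetrical": True},
]


def find_signs(entries: List[Dict[str, Any]], **criteria: Any) -> List[Dict[str, Any]]:
    """Return all entries whose parameters match every given criterion."""
    return [
        entry
        for entry in entries
        if all(entry.get(key) == value for key, value in criteria.items())
    ]


# Look for signs made with a flat hand and a symmetrical movement.
matches = find_signs(ENTRIES, handshape="flat", symmetrical=True)
print([match["label"] for match in matches])
```

Such a lookup only works if every entry is described with the same set of parameters, which is one reason why a shared way of transcribing signs matters.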

Difficulties she has noticed over time are that there are many dialects even within Austria and that signs in general can change quickly. Communicating with deaf people her own age is therefore a completely different experience from visiting a retirement home and signing with elderly people, because the signs differ. Another point she mentioned is the lack of teaching materials: material is not easy to get, and self-study is rather difficult for sign language. One of her fellow students quit studying sign language after one year because he could not manage to imitate the movements and was unable to train his spatial imagination for it. She stated that some lecturers describe online teaching for beginners in the first semesters, currently during the coronavirus pandemic, as very difficult because it is only a two-dimensional experience.

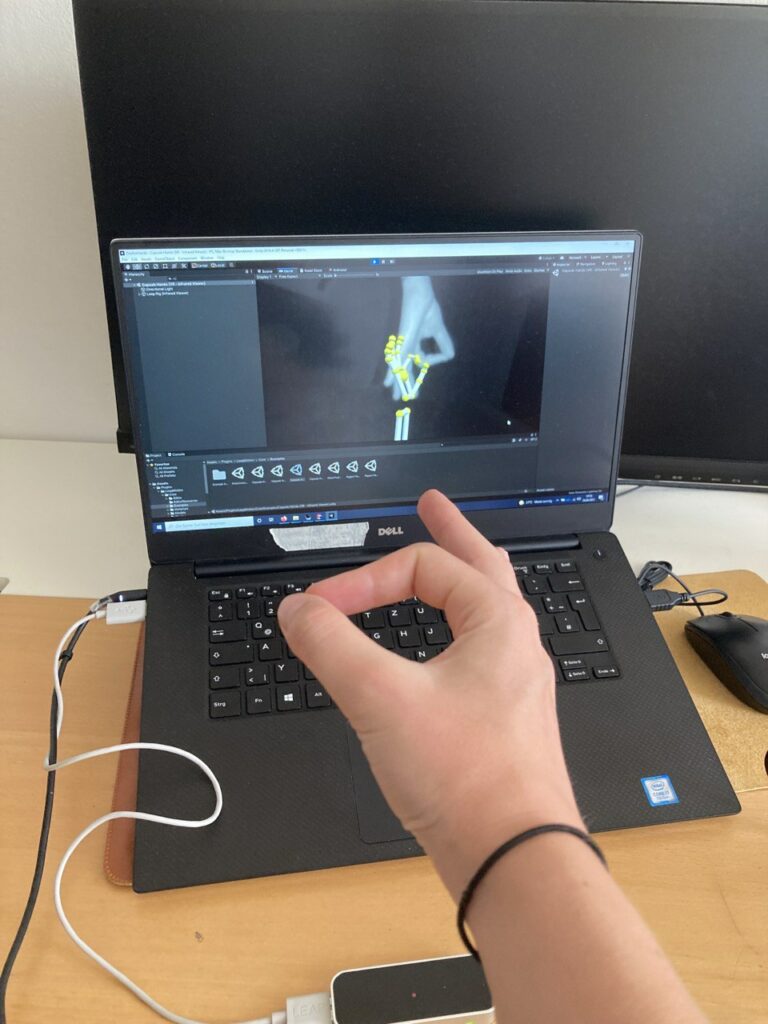

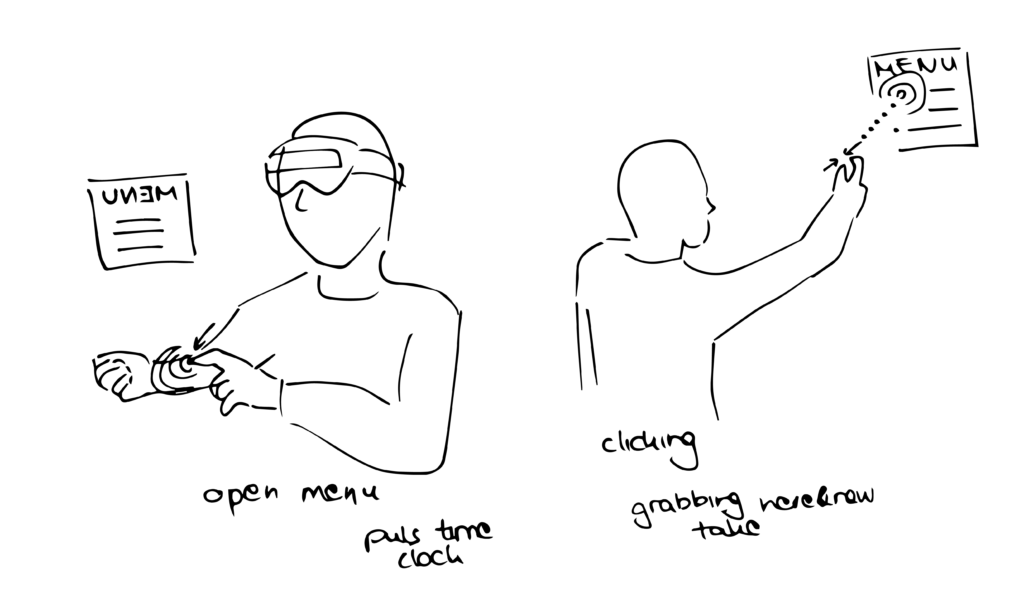

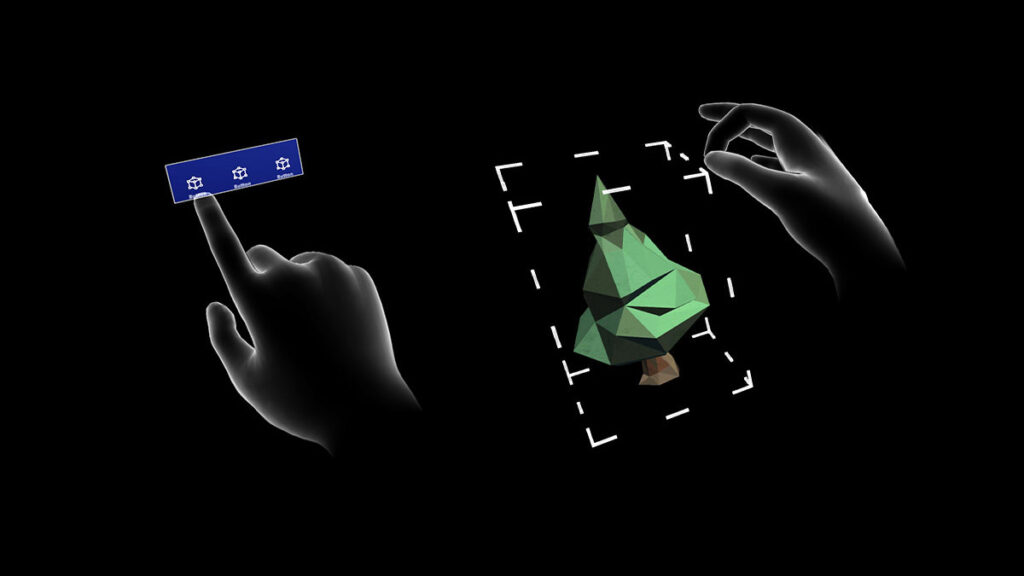

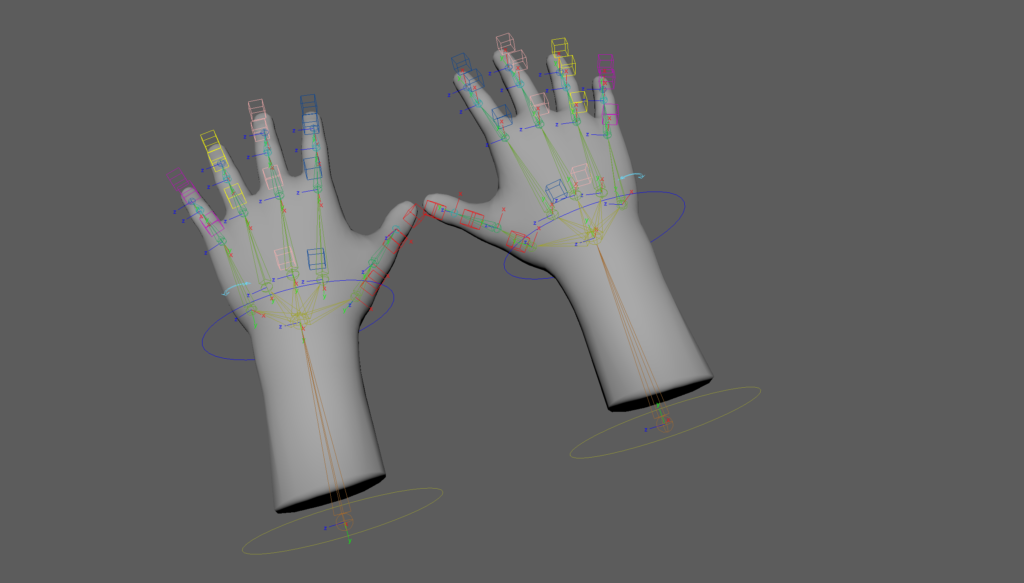

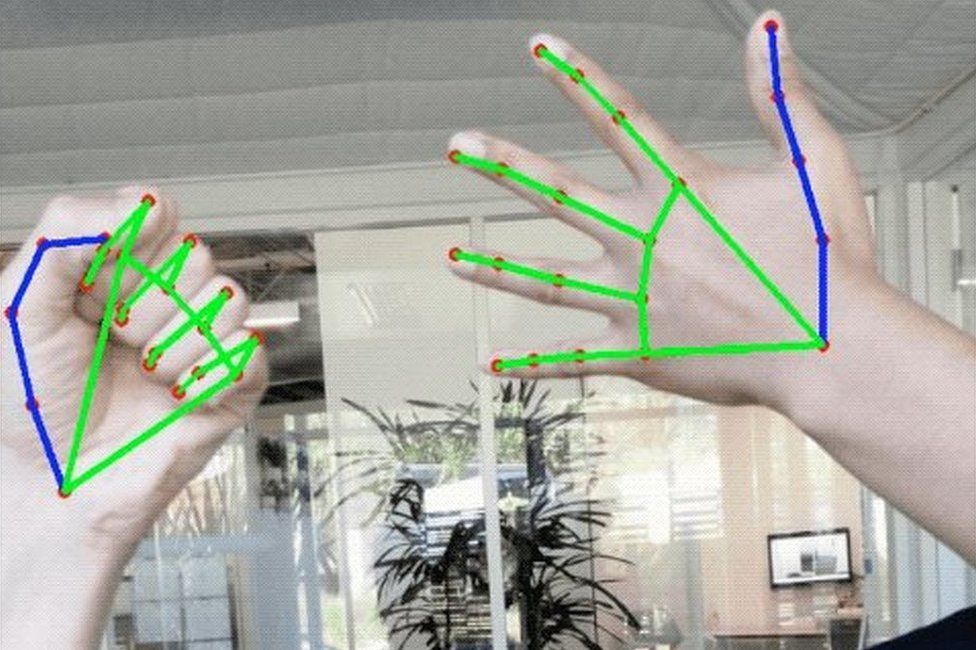

When I finally told her about my ideas for the application, its possible features and the visualization, she said that she liked the idea of an application for learning sign language. She can imagine it especially for the phase of learning vocabulary, because in her eyes contact with native signers is an absolute necessity for reaching a good level in the later stages of learning. Furthermore, she stated that the feedback feature is very important when there is no one next to you to tell you that a gesture is done wrong. Regarding the area that should be visible while signing, she showed me a few gestures that are not restricted to the main area from the hips to above the head, as she thinks the system would not be able to track them. She also showed me signs that touch the face, for example “eyebrow”, where you slide your fingers along your own eyebrows, “curious” (a touch on the bridge of the nose) and “glasses”. Such signs around the eyes and in the face, where you physically touch the thing you are referring to, could on the one hand be hard to reach when you wear glasses and on the other hand be hard for the system to track and give feedback on if they are made above the head or under the glasses.

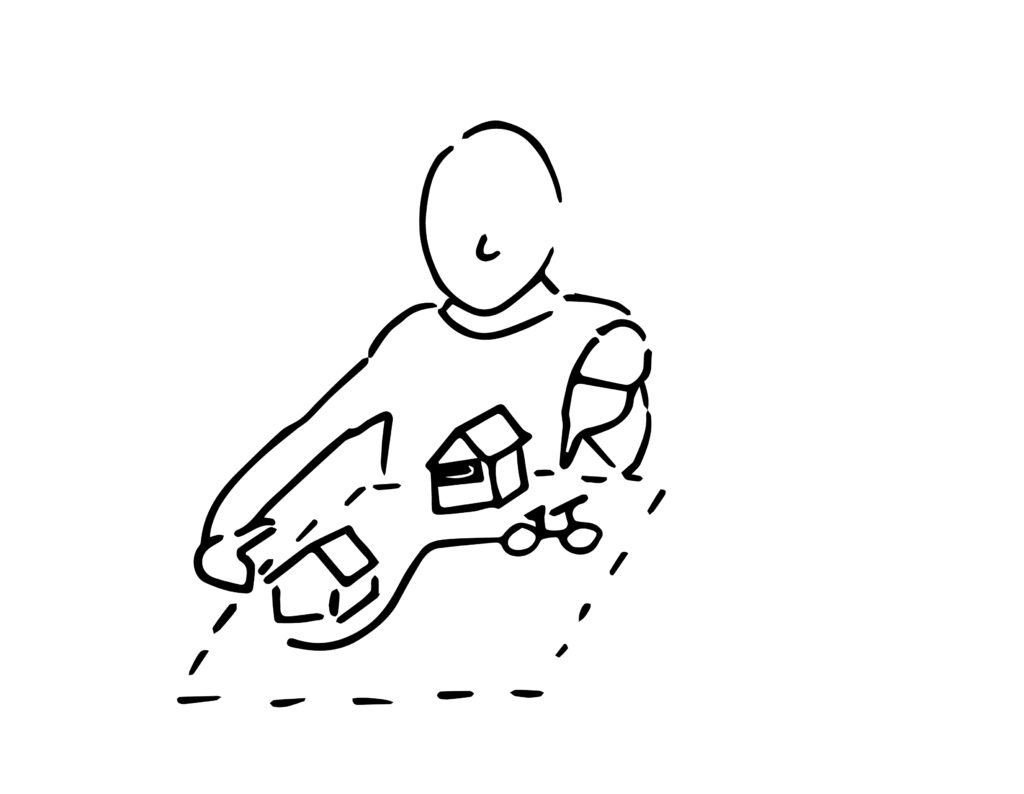

There are classifiers, for example spatial classifiers for “passing the house with the bicycle”: you sign the house, the bicycle and the passing, and you show your own position on the bicycle and how you move forward by pointing out positions in the area in front of your body. Once you have signed “house”, the house serves as a box in the signing space, and later you simply refer to it as a point or box in that space. When it comes to “where it is located and in which direction something goes”, one sometimes does not even sign “house” at the beginning, because it occurred in the previous sentences and it is obvious from the context that the house is meant.

Regarding tracking, she cannot imagine how scenarios like “going to grandma’s house but stopping at the bakery on the way” or pointing at friends standing nearby without signing their names again, as well as other classifiers, could be tracked correctly. She thinks it will take many deaf people to help develop the tracking systems to an adequate level of accuracy, so testing is indispensable. As an example, she mentioned that many deaf people cannot even understand what avatars are signing or trying to communicate, because they cannot relate to the movements and translate them into their own style, and because the avatars’ facial expressions are too difficult to read although they are decisive for the context. She and her fellow students have talked a lot about the facial expressions and naturalness of avatars. In their eyes, avatars first need to be tested with many deaf people so that developers can adjust their appearance until deaf people can understand them. Avatars would be useful for announcements at the train station (delays) or other short-notice information, which in most cases is currently not communicated to deaf people at all.

Conclusion

This interview was very helpful in confirming the findings of my research from the first semester and this one, as many of the points that came up matched what I had found in the literature and on the internet. It helped me find out whether there is a need for my application idea in the first place and which aspects I must consider when developing it. As I did not know much about formal education through universities or organizations that offer courses, I gained new insight into the phases of teaching and the methodological approach. I can also get more interview partners, as she offered to connect me with her fellow students, in particular one who is currently writing a bachelor thesis on the appearance and comprehensibility of avatars. This will be a great input for my own thesis: I imagined using avatars in the beginning, but after her explanation I am now considering whether another visualization would be more suitable and understandable. In general, I plan to focus on the visual appearance of the application and on how to structure it in the next semester. I could send possible visualizations to her fellow students for evaluation, as I see the visualization as a key factor in whether people are willing to use the application: it has to be understandable and aesthetic as well as helpful.

Sources

https://sites.google.com/site/oegsgaensefuesschen/