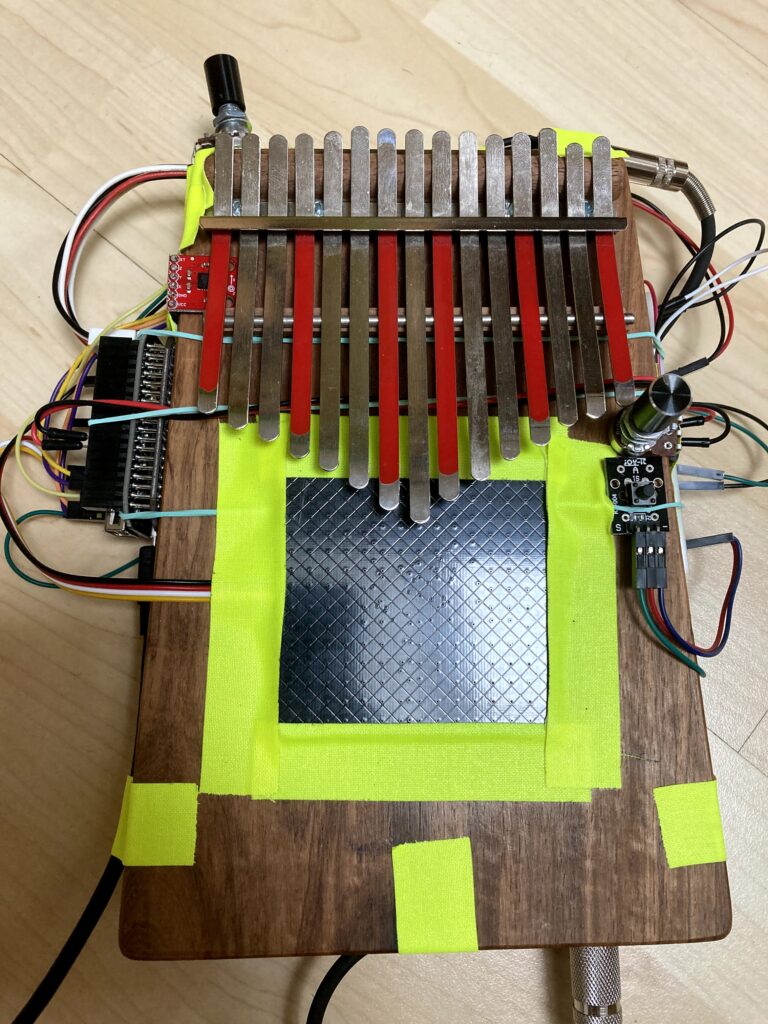

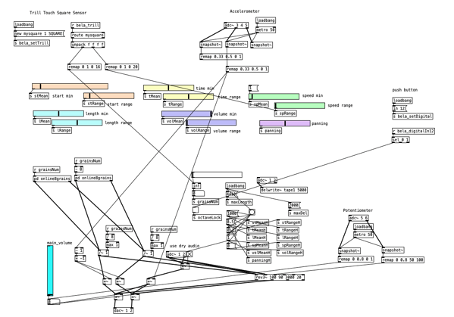

In the following image you can see the main Pure Data patch that runs on the Bela board and in which most of the parameters you can interact with are modulated. The actual granular synthesis takes place in separate subpatches. Now let’s look at the parameters you can control directly on the kalimba with the sensors.

In the top left corner of the patch, Pure Data receives signals from the touch sensor as values between 0 and 1. These values are remapped to the ranges of the parameters we want to modulate. The x-axis of the square touch panel, which runs from left to right, controls the number of grains. If your finger touches the left side of the panel, no grains are produced and you just hear the dry audio signal of the kalimba. The further you slide your finger to the right, the more grains appear. The y-axis controls the speed range of the grains, which of course also determines their pitch. At the bottom of the panel the grains sound similar and stay within a narrow pitch range. When you slide your finger upwards, much higher and lower grains appear.
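To make the remapping a bit more concrete, here is a minimal Python sketch of the idea. It is not the actual Pure Data patch: the function name `map_touch`, the maximum of 16 grains, the spread range of 1 to 24 semitones and the assumption that y = 0 is the bottom of the panel are all made up for illustration.

```python
def clamp01(value: float) -> float:
    """Keep a sensor reading inside the expected 0..1 range."""
    return max(0.0, min(1.0, value))


def map_touch(x: float, y: float,
              max_grains: int = 16,       # assumed upper limit, not from the patch
              min_spread: float = 1.0,    # assumed pitch spread at the bottom (semitones)
              max_spread: float = 24.0):  # assumed pitch spread at the top (semitones)
    """Map normalized touch coordinates to (grain count, pitch spread)."""
    x = clamp01(x)
    y = clamp01(y)
    # Left edge (x = 0) gives zero grains, so only the dry signal is heard.
    grain_count = int(round(x * max_grains))
    # Bottom edge (y = 0) keeps grains close in pitch; the top widens the range.
    spread = min_spread + y * (max_spread - min_spread)
    return grain_count, spread


if __name__ == "__main__":
    for pos in [(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]:
        print(pos, "->", map_touch(*pos))
```

In the real patch the same scaling would typically be done with a few multiply and add objects; the sketch only shows the arithmetic.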

The accelerometer controls the dry/wet mix between the dry audio signal and the grains. When the kalimba is held level, you hear the dry signal and the grains equally loud. If you tilt the kalimba to the right, the dry signal fades out and the grains get louder. The opposite happens if the kalimba is tilted to the left.
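The tilt behaviour described above can be sketched as a simple crossfade. This is only an illustration under a few assumptions: the tilt is taken to be already normalized to the range -1 (fully left) to +1 (fully right), the fade is linear, and the name `tilt_to_gains` is hypothetical. The patch itself may use a different curve.

```python
def tilt_to_gains(tilt: float):
    """Return (dry_gain, wet_gain) for a normalized tilt value.

    tilt = -1.0 -> fully left  -> only the dry signal
    tilt =  0.0 -> held level  -> dry and grains equally loud
    tilt = +1.0 -> fully right -> only the grains
    """
    tilt = max(-1.0, min(1.0, tilt))  # guard against out-of-range sensor values
    wet = (tilt + 1.0) / 2.0          # linear crossfade (an assumption)
    dry = 1.0 - wet
    return dry, wet


if __name__ == "__main__":
    for t in (-1.0, 0.0, 1.0):
        print(t, "->", tilt_to_gains(t))
```

A linear fade keeps the example short; an equal-power curve would be a common alternative if the mix sounds quieter in the middle position.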

The patch contains a function called Octave Lock, which is activated by the small push button. It restricts the pitch changes of the grains to whole octaves. This is very helpful when you want to play exact harmonies, because otherwise the pitch of the grains is too random.
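One way to think about Octave Lock is as a quantization step before the pitch offset is turned into a playback speed. The following Python sketch shows that idea; it assumes the grain pitch is expressed as an offset in semitones, and the function name `grain_speed` is hypothetical rather than taken from the patch.

```python
def grain_speed(semitones: float, octave_lock: bool) -> float:
    """Convert a pitch offset in semitones to a playback speed factor.

    With octave_lock enabled, the offset is first snapped to the nearest
    multiple of 12 semitones, so the speed is always a power of two.
    """
    if octave_lock:
        # Snap to the nearest octave (ties follow Python's round-half-to-even).
        semitones = 12 * round(semitones / 12.0)
    # Equal temperament: 12 semitones up doubles the playback speed.
    return 2.0 ** (semitones / 12.0)


if __name__ == "__main__":
    for offset in (-14.0, 5.0, 7.0):
        print(offset, grain_speed(offset, False), grain_speed(offset, True))
```

With the lock on, an offset of 7 semitones is played one octave up and an offset of 5 semitones stays at the original pitch, which is why the grains always fit harmonically to the played note.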

I also added a reverb using the Pure Data object rev3~. The amount of reverb is controlled by the potentiometer on the right side. The reverb is applied to both the dry signal and the grains. The second potentiometer controls the overall volume. It is placed on top because you don’t need to adjust the volume while playing.

Further Steps

The next step is to figure out the exact position of the sensors. Some problems still appear: for example, when you play the kalimba with your thumbs, you sometimes strike the touch panel by accident, which needs to be fixed. Of course, in the final product all the electronics, including the Bela board, will be placed inside the kalimba and will hopefully look less chaotic than they do right now!