Since I already knew hand tracking from the HoloLens 2 and, a little, through one of my lectures, “Interaction Design”, I wanted to try out tracking with the Leap Motion Controller. The Leap Motion Controller from the company Ultraleap is an optical hand tracking module that captures the movements of hands and fingers even when they move independently of each other. It is used for the development and deployment of various applications.

Set up

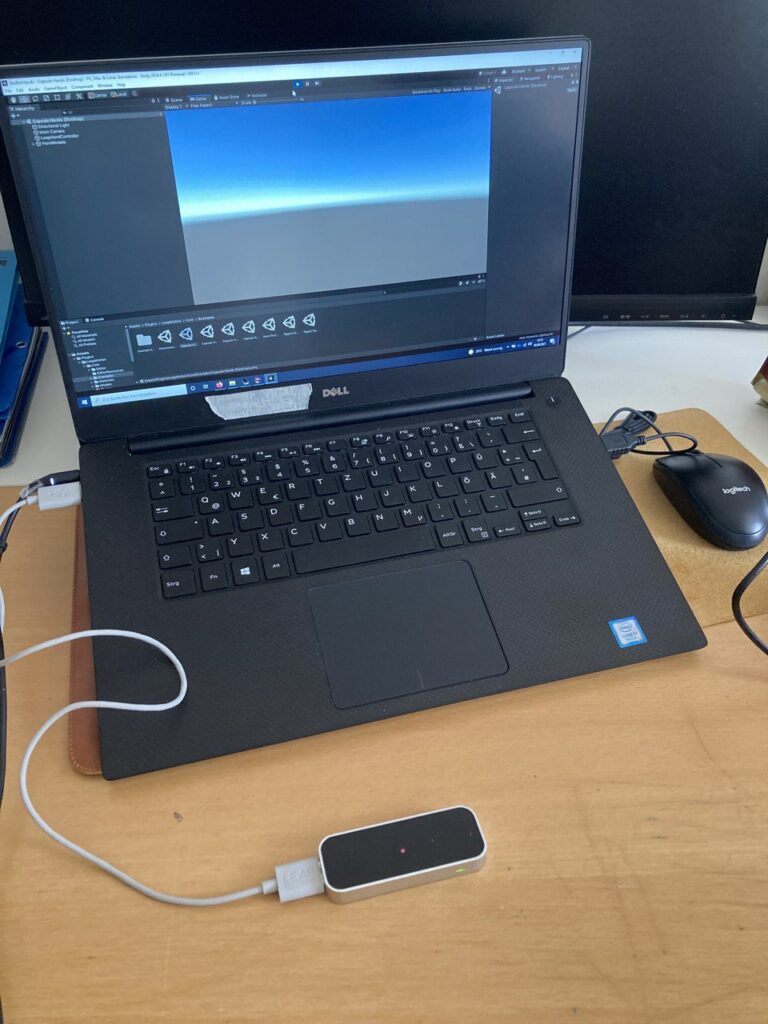

I borrowed a Leap Motion Controller from the media center at the FH and downloaded the accompanying software from the company's website.

You plug in the controller via USB and install the software. There are various ways to try out the Leap Motion Controller. Since my topic, learning sign language, deals mainly (though not only) with viewing or tracking hands, I wanted to see how I could interact with it and which movements can be tracked precisely. I followed one of the many helpful YouTube videos on setting it up with the Unity engine. After installing Unity, you open it and install XR Plugin Management in the Package Manager to prevent problems when downloading the packages for the Leap Motion Controller. You then import the Unity packages provided by Ultraleap into the Assets folder and can try out the different examples they contain.

Try out and conclusion

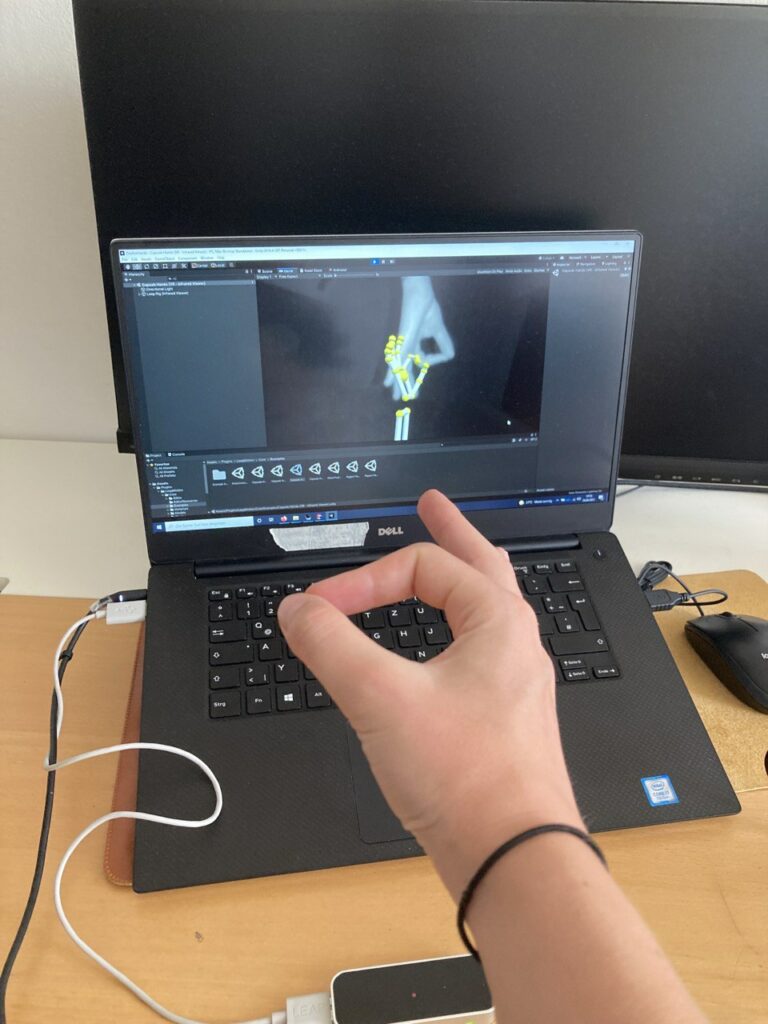

Trying out specific movements helped me a lot to understand how such systems track hands and which parts of the hand provide the most useful information for a system that might translate them into sign language.

I learned that tracking both hands at the same time works very well, but the controller has difficulty tracking precisely when signs are too complex, for example when many fingers overlap. After randomly moving my hands and fingers and changing their positions, I tried to spell my first name, which has five letters. The controller tracked three of them without any problems, but with the other two it could not recognize the position of the thumb or index finger, so it showed a different gesture than the one I was making. I tried to correct this by turning the controller or placing it at another angle, and by turning my hands around so it could see them from the other side, but it always fell back to the wrongly tracked gesture.
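To make it clearer why a single hidden finger can change the whole result, here is a minimal sketch in Python. It is not how the Leap Motion software works internally. It only assumes that a hand pose is reduced to five extended/curled finger states and then compared with stored templates. The pose names, the `TEMPLATES` table and the `classify` function are made up for illustration and are not real sign language letters.

```python
# Hypothetical finger order: (thumb, index, middle, ring, pinky).
# True means the finger is extended, False means it is curled.
TEMPLATES = {
    "pose_fist": (False, False, False, False, False),
    "pose_thumb_out": (True, False, False, False, False),
    "pose_point": (False, True, False, False, False),
    "pose_open": (True, True, True, True, True),
}


def classify(fingers):
    """Return the name of the template with the fewest differing finger states."""
    def mismatches(name):
        return sum(a != b for a, b in zip(fingers, TEMPLATES[name]))

    return min(TEMPLATES, key=mismatches)


# The pose the user is really making: only the thumb is extended.
actual = (True, False, False, False, False)

# What a tracker might report if the thumb is hidden behind the other fingers
# (assumption: a hidden finger is reported as curled).
tracked = (False, False, False, False, False)

print(classify(actual))   # pose_thumb_out
print(classify(tracked))  # pose_fist
```

In this toy model, turning the hand does not help as long as the thumb stays hidden, because the input to the comparison stays the same.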

Heuristics for LeapMotion Interaction

As the Leap Motion developers pointed out a few years ago, applications should be developed with a focus on the following points, which I can relate to and understand better now that I have tried out the Leap Motion Controller:

1. consistency in tracking: keep working on the accuracy and consistency of tracking by having multiple people perform the actions, motions and gestures

2. ease of detection: work out how easily each motion can be detected and which obvious conditions define it, so that it is clearly separated from other things in the surroundings that could also be detected

3. occlusion: the controller should be able to detect the environment, but if the sensor is covered by physical objects, tracking is inaccurate or not possible at all. If a motion involves occluded parts, the system cannot see part of the hand and makes assumptions from the available data. A diagnostic visualizer can help to test a variety of detectable areas within the field of view in order to prevent occlusion.

4. ergonomics: this is usually about improving posture and working environments, but here there is no physical object to interact with. Affordances and gestures need to be adapted to the movements of the user's body so that they do not cause harm or strain, keeping the environment and comfortable positions in mind.

5. transitions: create a concept for the interaction with the application. Every interaction should be clearly defined and distinct, so that the gesture can be detected by the system and is more memorable for the user. If actions have similar results, slightly similar interactions are acceptable; otherwise they should differ. Whether the user can perform an action in the air easily depends on where the motion begins and ends in the space in front of the body. Awkward switches should therefore be minimized by implementing an “initialization” or “resting” pose from which actions start.

6. feedback: consider how the application provides feedback to the user who is performing the gesture, because the lack of hardware-based physical feedback in motion-based interactions can leave the user unaware of the current state of the application and the system. The user interface should communicate three things about the user's interaction: Where am I now? Where do I need to be to complete the action? How far and in what way do I need to move?
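The three feedback questions from the last point can be sketched in a few lines of Python. This is only an illustration under my own assumptions: positions are `(x, y, z)` tuples in metres, the axis names (x = left/right, y = down/up, z = back/forward) are hypothetical, and the `guidance` function and its `tolerance` parameter are not part of any Ultraleap package.

```python
import math

# Hypothetical axis naming: (negative direction, positive direction) per axis.
AXIS_NAMES = (("left", "right"), ("down", "up"), ("back", "forward"))


def guidance(current, target, tolerance=0.02):
    """Answer: where am I, where do I need to be, how far and which way?"""
    # Difference between target and current position on each axis.
    delta = tuple(t - c for c, t in zip(current, target))
    distance = math.sqrt(sum(d * d for d in delta))

    if distance <= tolerance:
        # Close enough: tell the user to keep the hand where it is.
        hint = "hold"
    else:
        # Name the direction of the axis with the largest remaining offset.
        axis = max(range(3), key=lambda i: abs(delta[i]))
        hint = AXIS_NAMES[axis][1 if delta[axis] > 0 else 0]

    return {
        "position": current,   # Where am I now?
        "target": target,      # Where do I need to be?
        "distance": distance,  # How far do I need to move?
        "hint": hint,          # In what way do I need to move?
    }


result = guidance((0.0, 0.0, 0.0), (0.3, 0.1, 0.0))
print(result["hint"])  # right
```

In a real application the hint would be shown visually or as sound instead of being printed, but the idea stays the same: the interface always knows the answers to the three questions and keeps showing them to the user.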

With Unity I can eventually build the whole setup of a possible application. I have already been reading up on the development of the final product, both last semester and this one. There are many ways to approach it, so it is necessary to develop a concept first and to design the experience and the interactions beforehand.

source:

https://medium.com/@LeapMotion/6-principles-of-leap-motion-interaction-design-98bddff77a53