I tried out the HoloLens 2 for the first time, as I had never used AR glasses before.

The experience was very immersive, and after adjusting the glasses to my head and vision I had great fun playing a game. Shooting robot spiders was purely for fun and had nothing to do with my project or with an educational app, but it was interesting to learn how to navigate the interface and to remember the different commands for moving through the experience. It made me think about signs in general, about overlaps between the navigation gestures and signs in sign language, and about the whole interaction with AR objects through gestures.

Free-form gestural systems can be navigated with many different human gestures and movements. I reviewed Dan Saffer's book *Designing Gestural Interfaces* (2008). Although it was published more than ten years ago, it still gave me a useful overview, because many of its pages show pictures of a person making different movements with the hands and the whole upper body, together with how the system reacts, for example by lowering the temperature. It was a good first impression of the many possibilities for interacting with the body.

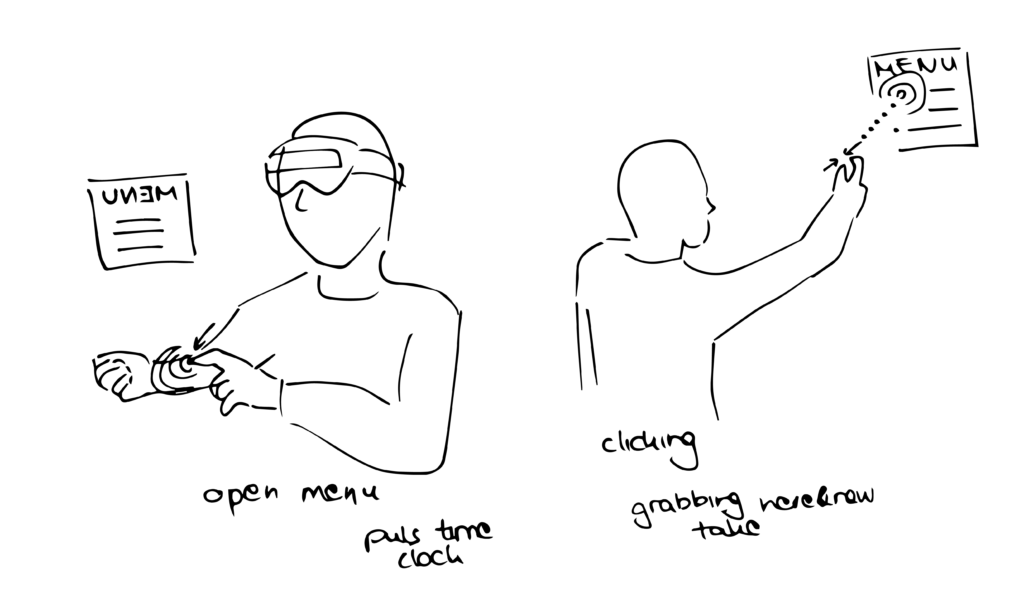

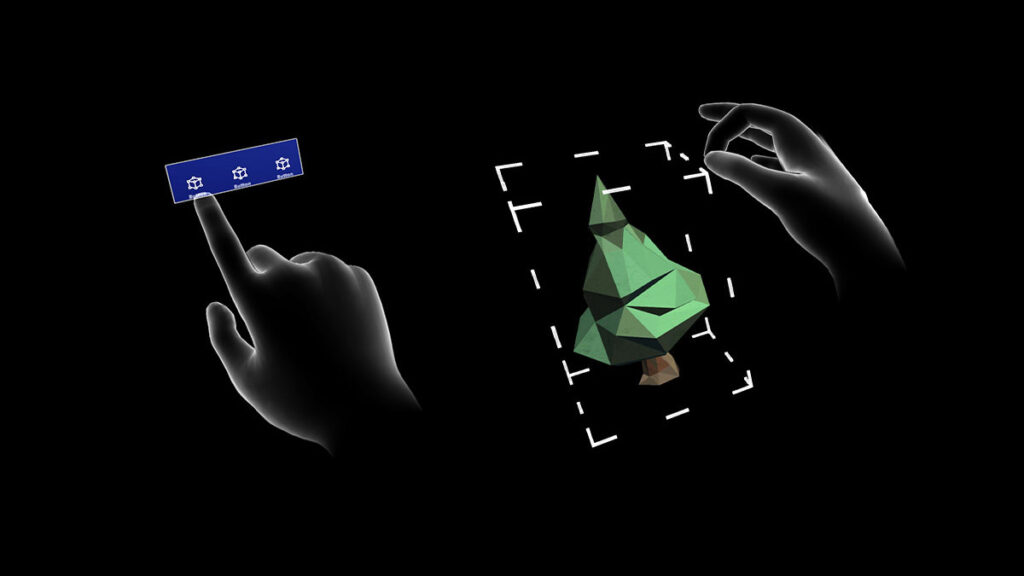

Today you can interact with holograms through many basic actions and gestures such as dragging, dropping, clicking, holding and releasing, which produce effects like scaling, rotating and repositioning. The hand tracking in Microsoft HoloLens 2 provides instinctual interaction: users can select and position holograms by touching them directly, as they would touch an object in real life. Besides direct touch, you can use hand rays to interact with holograms that are out of reach. I noticed that you have to get used to using your own body very quickly. For example, you often want to open the menu, and to do so you have to tap on your arm. Another interaction I used often was lifting my arm to bring up the hand ray and then clicking on a hologram by pinching my thumb and index finger together, as seen above.
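To make the "click by pinching" idea more concrete, here is a minimal sketch of how a pinch could be detected from hand-tracking data. It assumes the tracker delivers 3D positions (in metres) for the thumb tip and index fingertip every frame; the class name `PinchDetector` and both threshold values are hypothetical and not taken from HoloLens or any Microsoft toolkit. Using two thresholds (hysteresis) keeps the click from flickering when the fingers hover near the boundary.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # assumed (x, y, z) position in metres


@dataclass
class PinchDetector:
    """Hypothetical pinch ("click") detector with hysteresis.

    The thresholds are illustrative assumptions, not HoloLens values.
    """
    press_distance: float = 0.02    # fingertips closer than 2 cm -> pinch starts
    release_distance: float = 0.04  # fingertips farther than 4 cm -> pinch ends
    pinching: bool = False

    def update(self, thumb_tip: Vec3, index_tip: Vec3) -> Optional[str]:
        """Feed one tracking frame; return 'press', 'release' or None."""
        gap = math.dist(thumb_tip, index_tip)  # Euclidean fingertip distance
        if not self.pinching and gap < self.press_distance:
            self.pinching = True
            return "press"
        if self.pinching and gap > self.release_distance:
            self.pinching = False
            return "release"
        return None  # no state change in this frame


detector = PinchDetector()
for x in (0.05, 0.01, 0.03, 0.05):  # index tip moving toward and away from the thumb
    print(detector.update((0.0, 0.0, 0.0), (x, 0.0, 0.0)))
```

The fingertips closing to 1 cm reports a press, and the pinch only ends once they separate past 4 cm, so the intermediate 3 cm frame does not release it.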

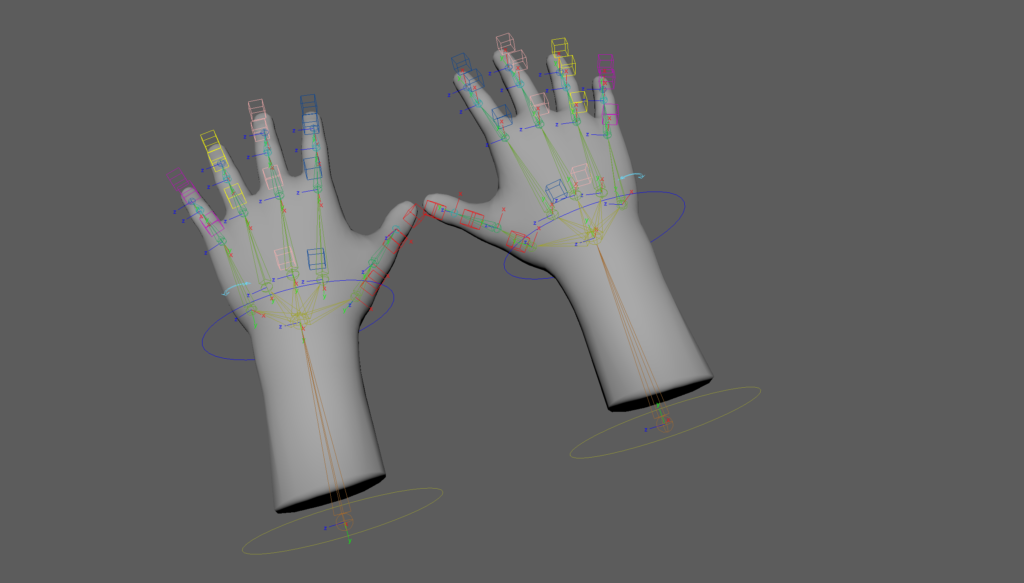

Microsoft's interaction guides show which components are, or can be, taught to users and how users are guided to learn gestures. This is done with a hand coach: a 3D-modeled hand that repeatedly demonstrates the gesture until the system detects the user's hands and the user starts to imitate the movement. If the user then does not interact with the hologram or component for a period of time, the hand coach starts demonstrating the correct hand and finger placement again until the user understands the process. What interests me for my project is how the user is taught purely through hands. It was also helpful to learn how I could create my own hand coach, since the guidelines provide this information as well: they name tools such as Unity and offer a downloadable hand asset for creating your own controller setup.
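The show/hide behaviour of the hand coach described above can be sketched as a small timer-based state machine. This is a minimal sketch under stated assumptions, not Microsoft's implementation: the class `HandCoach`, its methods and the 10-second `idle_timeout` are hypothetical, and for simplicity "hands detected" and "user interacted" are treated as the same event.

```python
from dataclasses import dataclass


@dataclass
class HandCoach:
    """Hypothetical hand-coach visibility logic (not Microsoft's code).

    idle_timeout is an assumed inactivity period in seconds.
    """
    idle_timeout: float = 10.0
    visible: bool = True        # the coach starts out demonstrating the gesture
    last_activity: float = 0.0  # time of the user's last detected hand activity

    def on_user_activity(self, now: float) -> None:
        """Hands detected or hologram touched: hide the coach, restart the timer."""
        self.visible = False
        self.last_activity = now

    def tick(self, now: float) -> bool:
        """Call once per frame; show the coach again after a period of inactivity."""
        if not self.visible and now - self.last_activity >= self.idle_timeout:
            self.visible = True
        return self.visible


coach = HandCoach()
print(coach.tick(0.0))       # coach is demonstrating
coach.on_user_activity(2.0)  # user's hands appear
print(coach.tick(5.0))       # hidden while the user is active
print(coach.tick(12.0))      # 10 s without interaction: demonstrating again
```

In an engine such as Unity, `tick` would be called every frame with the current time; that wiring is not shown here.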

Learning to navigate these AR tools and systems takes practice, but it becomes clear that in this scenario people are willing, and technically curious enough, to imitate gestures in order to gain control over something. They are willing to get used to gesture-based navigation and, over time, learn the gestures until they remember them completely. In my last post I described an issue the SAIL team raises: first-time learners who are not used to communicating with the space in front of them or with their own body have to learn depth perception. This willingness gives me hope that, with the right amount of information and instruction, AR can help people who are interested in learning new interaction methods get through that early phase of using their body for communication more easily.

Sources:

Saffer, Dan: Designing Gestural Interfaces, 2008, pp. 210-232

https://docs.microsoft.com/en-us/dynamics365/mixed-reality/guides/authoring-gestures-hl2

https://docs.microsoft.com/en-us/windows/mixed-reality/design/hand-coach