Description

I’m clumsy. It’s one of my defining characteristics, and it’s always the first word people use to describe me.

I thought a lot about this word and ended up asking myself: is it me who is clumsy, or my interactions? So what is a clumsy interaction? For me, it is any interaction whose outcome differs from the one expected, whether it takes place between two people or between a person and a machine.

I wondered where this awkwardness could come from, and whether it was simply inappropriate behavior. When we perceive something as different, or have difficulty understanding it, we try to adapt our behavior. This adaptation is not always successful, and an unsuccessful adaptation is what I would call an awkward interaction.

In some cases, the adaptation is quick: after two or three clumsy attempts, our behavior becomes appropriate. In other cases, adaptation seems impossible and the clumsiness keeps recurring.

By studying a person’s behavior, we can understand how they function and which situations they have difficulty coping with. In this research, I plan to use Behavioural Design to better understand the subject.

Motivations

What interests me about interaction design is that it is centered on people: their behavior and the way they interact with their environment, both physical and digital.

My main motivation for this subject is to understand human behavior, because before analyzing interactions that can be awkward, it is necessary to understand how humans interact. I also want to understand people’s relationship to objects and what characterizes it, since this relationship is a key factor in the emergence of awkwardness.

Introduction

I decided to focus this research on clumsy interactions between humans and machines, or between humans and objects. I am trying to understand where this awkwardness comes from, at what level it appears, and which factors contribute to it. The following examples and scenarios lead to the main questions of my research.

Understanding clumsy interaction

First example

Consider an older woman using her phone. Like many people her age, she has difficulty understanding all of its possible uses. Her interaction with the object is limited by her lack of knowledge and by an object that does not feel intuitive to her, and this creates awkwardness.

At the opposite end of this scenario are the interactions between children and smartphones. These interactions are intuitive and, above all, excessive. Where older people have difficulty making the object their own, children, as digital natives, make it an extension of themselves. This is also a clumsy interaction, because a smartphone is meant to be used both intelligently and in moderation.

Let’s now look at Beatrice Schneider’s Tody concept, which addresses this issue with a product that links to the phone and aims to reduce the time children spend on smartphones. This small object acts as an intermediary between the family, the child, and the phone.

What is also very interesting about this product is that it has two eyes, a mouth, and four legs, which immediately makes it seem more alive.

All this information leads me to a first question:

How do society and new technologies affect our interactions and behaviors, depending on our profile?

Second example

Now let’s take a garbage can as a reference, along with the attitude we have towards it. It is an everyday object, yet we regard it negatively: we tend to find it dirty, and we don’t particularly like interacting with it.

Objects are the basis of our everyday interactions, but beyond objects, machines are also present in our daily lives. Today, we try to reduce the discomfort and awkwardness of our contact with objects and machines through personification.

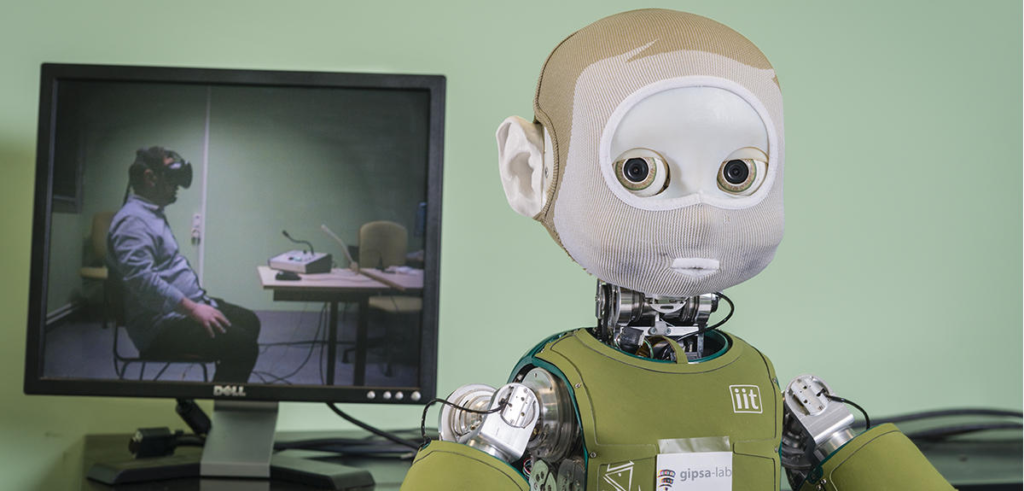

Take the example of Nina, a robot from the CNRS designed to assist people in their daily lives. What is interesting about this robot is that it has been given a personality and a human quality through its face: it has lips, an articulated jaw, irises, and eyelids, and it is animated to reproduce facial expressions. In theory, this “human” appearance serves no functional purpose for the robot, yet it lets people perceive it differently and thus gives it a real place in everyday life.

A well-known example of human behavior given to a robot is the film WALL-E, which shows us the story and “life” of a robot. We feel emotion and empathy when watching it because we can clearly see its eyes and head.

All this information leads me to a second question:

How do we consider objects through our interactions?