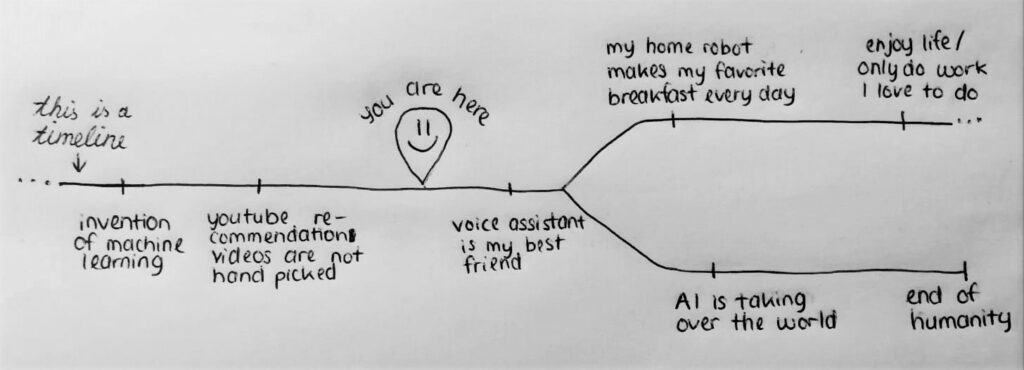

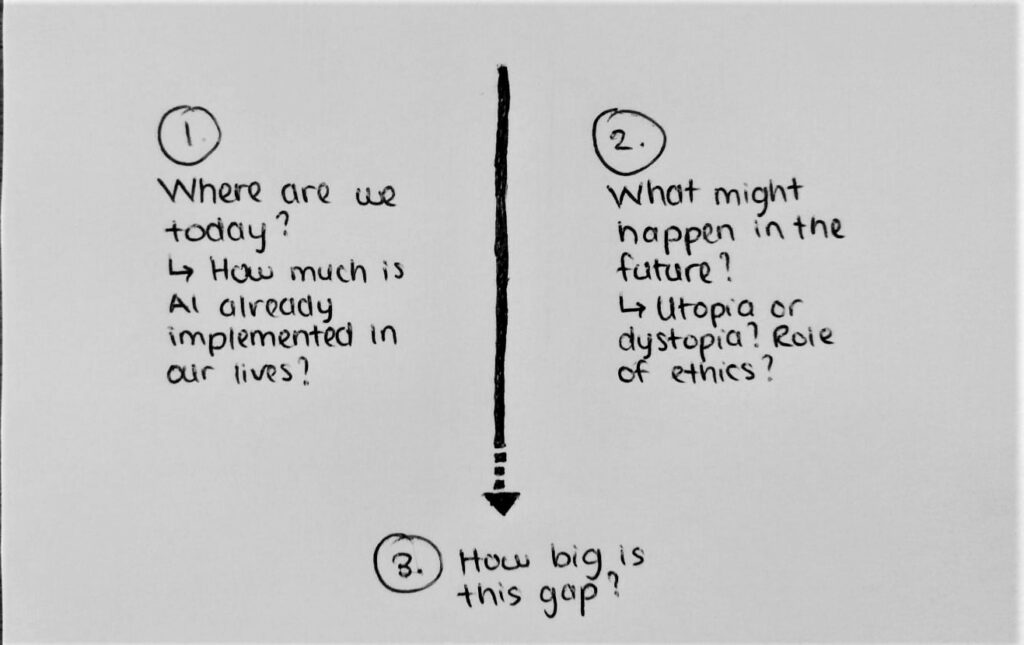

In the last blog post, we looked at how we as designers could use artificial intelligence (AI) in our work. For this post, we need to go back to the first question: will AI take over the world and kill us all?

I talked to my friend Michael Meindl, who is doing his PhD in the field of artificial intelligence. Right now, he is researching how robots and machines learn movement and how the different parts of a machine can communicate with one another, just as the parts of a human body do. He uses machine learning to make this communication within the system possible. His research will probably be used in the medical field, for example for prostheses. He is the smartest friend I have (though the competition isn’t exactly fierce, since I’m friends with lots of lovable idiots). I asked him what he thinks about the future of AI, what it means for us and, of course, whether humanity will be destroyed by this technology.

He said that, judging by how AI is discussed in the media, we are talking about the wrong issues and trying to solve problems that might never arise. Our thinking about AI is shaped by science fiction books and movies, and by the misconception that a machine might have human attitudes or interests. The following article is based on my conversation with Michi.

We often hear about how incredibly little time it takes an AI to learn a new game. People take this to mean that AI is a super quick way to learn things. But we need to take into account the years of research and programming behind it. Even if you have two AIs that play different games and want to merge them, it takes years of work to get that done. Also, the way an AI learns new things seems rather odd when we think about it. If a human tried to learn chess by playing it a thousand times, just trying out moves over and over again to see whether he could win that way, you’d consider him stupid. But that is essentially what many machine learning algorithms do. And since we don’t even really understand how human intelligence works, how are we supposed to create an artificial general intelligence (AGI)?
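To make the “trying out moves over and over again” idea a bit more concrete, here is a minimal sketch in Python. It is not Michi’s research and nothing like a real chess program; it assumes a made-up toy “game” in which each of three hypothetical moves wins with a fixed, hidden probability. The program, a simple epsilon-greedy “multi-armed bandit” learner (the function name `learn_by_trial_and_error` and all numbers are illustrative assumptions), knows nothing about the game. It just keeps trying moves, counts how often each one won, and mostly repeats whichever has worked best so far.

```python
import random


def learn_by_trial_and_error(win_probs, plays=5000, epsilon=0.1, seed=0):
    """Hypothetical toy example: find the best 'move' purely by trying moves repeatedly.

    win_probs: hidden chance that each move wins (the learner never reads these directly,
    it only sees win/lose results).
    """
    rng = random.Random(seed)
    n_moves = len(win_probs)
    estimates = [0.0] * n_moves  # running average win rate observed for each move
    counts = [0] * n_moves       # how often each move has been tried

    for _ in range(plays):
        if rng.random() < epsilon:
            # Explore: occasionally try a random move.
            move = rng.randrange(n_moves)
        else:
            # Exploit: repeat the move that has won most often so far.
            move = max(range(n_moves), key=lambda m: estimates[m])

        # The hidden "game" answers with a win (1.0) or a loss (0.0).
        won = 1.0 if rng.random() < win_probs[move] else 0.0

        # Update the running average for this move (incremental mean).
        counts[move] += 1
        estimates[move] += (won - estimates[move]) / counts[move]

    return estimates, counts


if __name__ == "__main__":
    estimates, counts = learn_by_trial_and_error([0.2, 0.5, 0.8])
    best = max(range(len(estimates)), key=lambda m: estimates[m])
    print("best move found:", best)
    print("times each move was tried:", counts)
```

With these assumed numbers, the program should end up favoring the third move, not because it understands anything about the game, but because that move simply won most often over thousands of tries.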

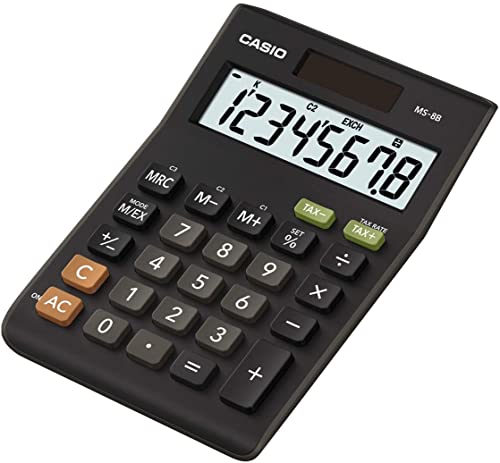

Is this calculator an AI?

Back in the day, people might have said yes; today, probably not. This example shows that the definition of intelligence is often a very subjective matter. Some of the calculations we type in might be difficult to solve, but in the end the system just follows given commands. Is that intelligent?

This points to a problem with how we define intelligence. In fact, an AI just does what it is told to do: it follows given commands, and that sometimes makes the system look intelligent to us. The truly intelligent part of such an instruction-following system is the algorithm that makes it follow those instructions. If a calculator doesn’t seem like an AI to you, then a self-driving car shouldn’t either. Just like a calculator, it follows commands and instructions.