In this article we are going to talk about curiosity, the faculty that lets us take an interest in the environment around us. It could prove to be a key element in how we make sense of that environment, and therefore in understanding clumsy interactions.

What is curiosity?

Curiosity is described as a “tendency to learn, to know new and hidden things” by the Robert dictionary. It is a faculty that we exercise in different ways and that can be stimulated. These are the first characteristics we are going to study.

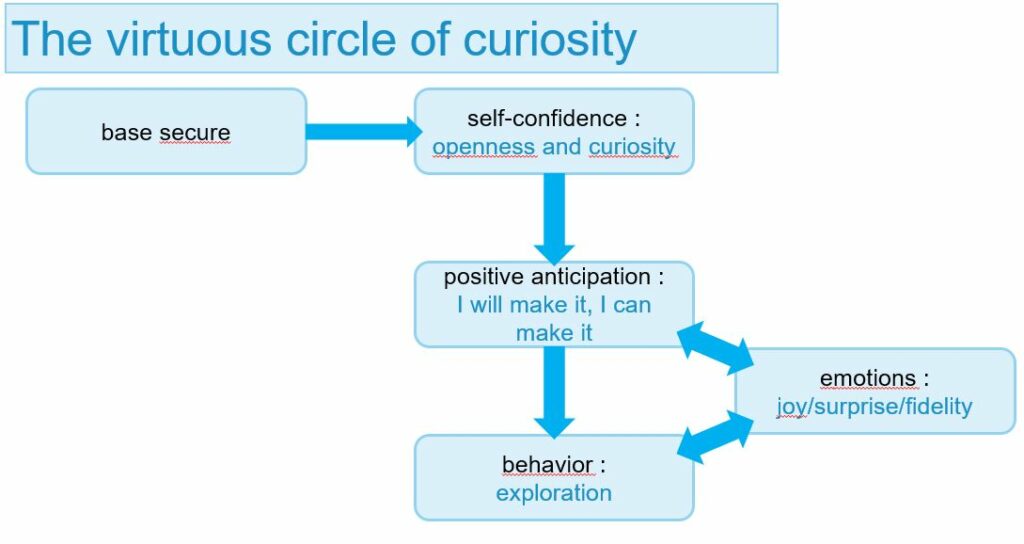

First of all, we can establish that curiosity is an innate quality that we have all possessed since birth. When we are babies, it is this faculty that makes us want to experiment and interact with the objects around us through touch and taste. A baby with a toy is most of the time testing it, either by shaking it or by putting it in his mouth. By doing this he explores the object. Jean Piaget calls this phase, in which the child studies the object, the actions he can perform with it, and their effects, “the stage of active experimentation”.

It is very important to understand that curiosity is an innate faculty that develops over time, and that depending on the context we will not all reach the same level of curiosity. One of the key elements in its development is the presence or absence of a “secure base”. Its presence is what will, for example, reassure a child and give him the confidence to go exploring. During childhood, this secure base often corresponds to the parents. If they are not there, the child will not try to venture into a new environment because he will consider it potentially dangerous. The relationship with our parents can also influence the development of curiosity: if this secure base is not stable, the child will be only slightly curious. We can also note that the secure base evolves over time and can become a group of friends, a spouse, co-workers, etc.

The second source of the development of curiosity, which will play an important role in awkward interactions, is interaction with the environment. Curiosity is awakened and reinforced in the child by the positive emotions that follow his successful explorations. When a child begins to interact with what surrounds him, he discovers that some of these interactions generate positive sensations and emotions. For example, by putting a pacifier in his mouth he will realize that this contact generates a sensation of pleasure, and he will want to find or reproduce this sensation by bringing other objects to his lips. As Jean Piaget has shown, we can only evolve thanks to and through exchanges with our environment. If curiosity is essential to the discovery of our environment, the environment is in turn essential to the development of our capacities. Take the example of language: we are pre-programmed to develop it, yet without an environment that requires communication it is hard to acquire.

Let us recall the important elements of the development of curiosity:

- The secure base gives a person the confidence to explore his environment for the first time.

- Interactions with the environment are a source of emotions. When the emotion generated is positive, it makes one want to repeat the experience.

What is the place of curiosity in the awkward interactions of everyday life?

We have seen that curiosity is both an innate and a learned capacity, and we have explained its development in childhood. But what about its place in our adult, everyday life? We will study it through two situations: the first is the appearance of something new, and the second is habit.

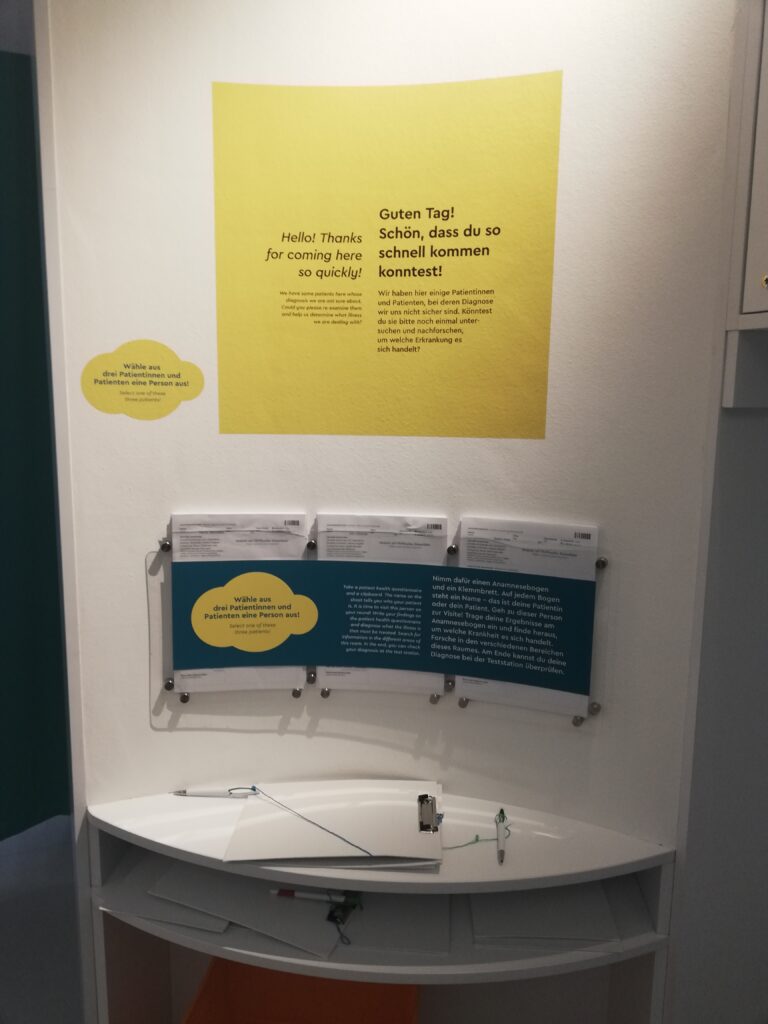

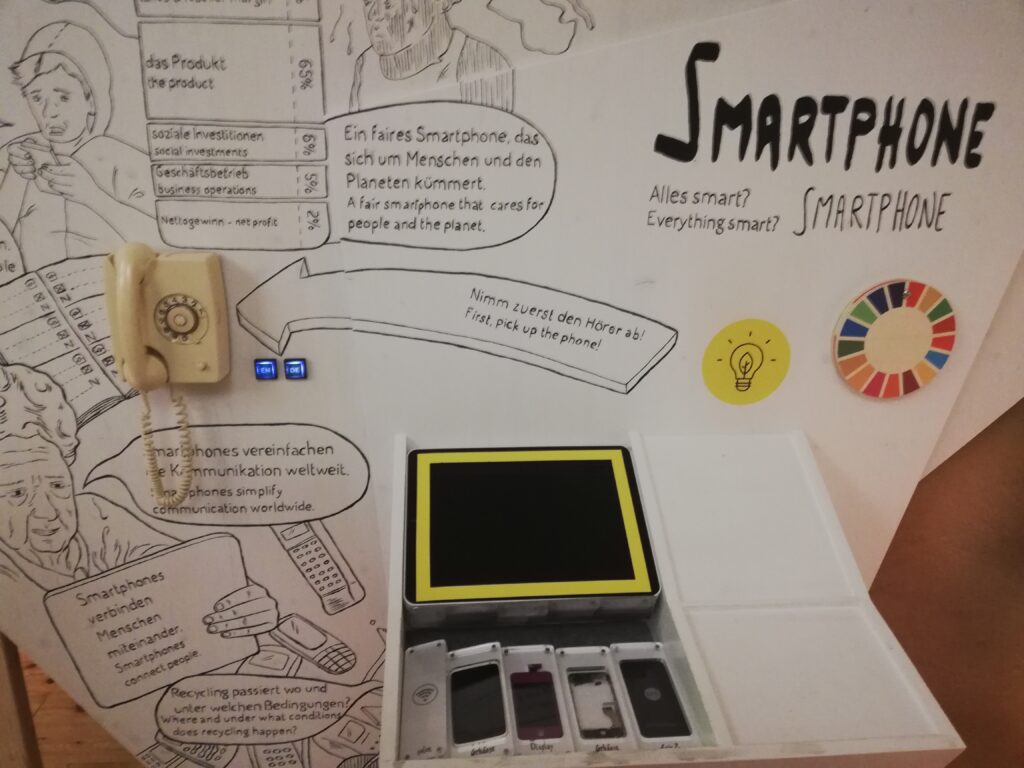

Facing something new

In our lives, we constantly have the opportunity to experience new things, try new activities, and use new objects. Curiosity is a key element that allows us to progress, invent and innovate. However, when we are faced with something new, the behavior we adopt is not always that of a curious person. On the contrary, we can be rather resistant, for various reasons. The first is that our brain likes to conserve its energy and therefore does not necessarily appreciate novelty, which requires it to spend cognitive resources on understanding. For example, I will persuade myself to buy the same model of coffee maker because the others are full of new functions that will be useless to me, when the real reason is that I don’t want to spend energy learning how to use a new coffee maker.

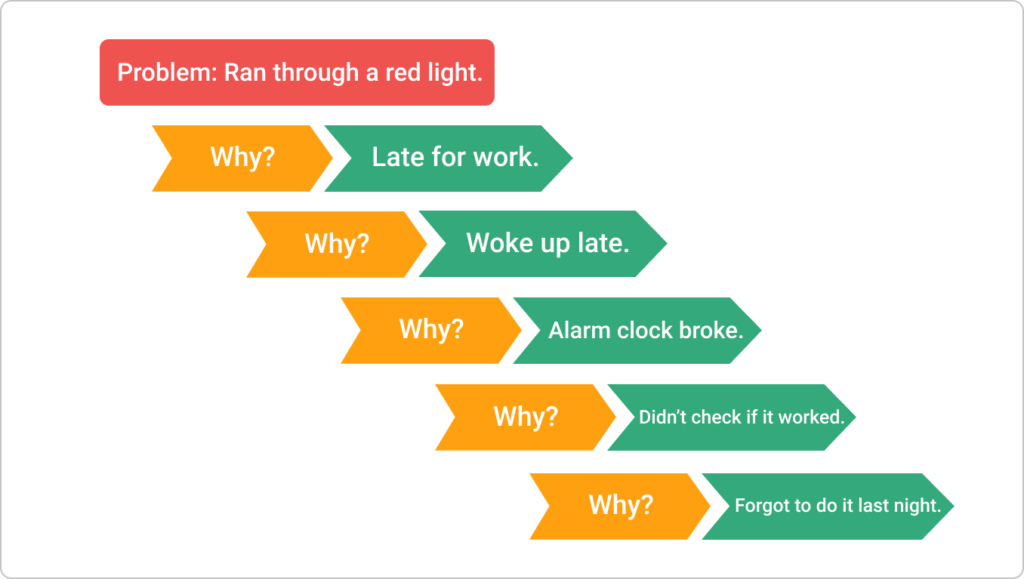

The second reason is negative anticipation of what might happen. In this case we prefer not to change anything, for fear that the novelty might trigger negative emotions or sensations. This negative anticipation blocks curiosity. For example, a new printer has just arrived at our company, and like all the other employees we have received its instructions and read them. If we anticipate mishandling this printer the first time we use it, we can remain blocked until a colleague has shown us how to use it. We see here that negative anticipation wipes out all confidence: we anticipate a clumsy interaction that does not yet exist.

Curiosity in our daily life

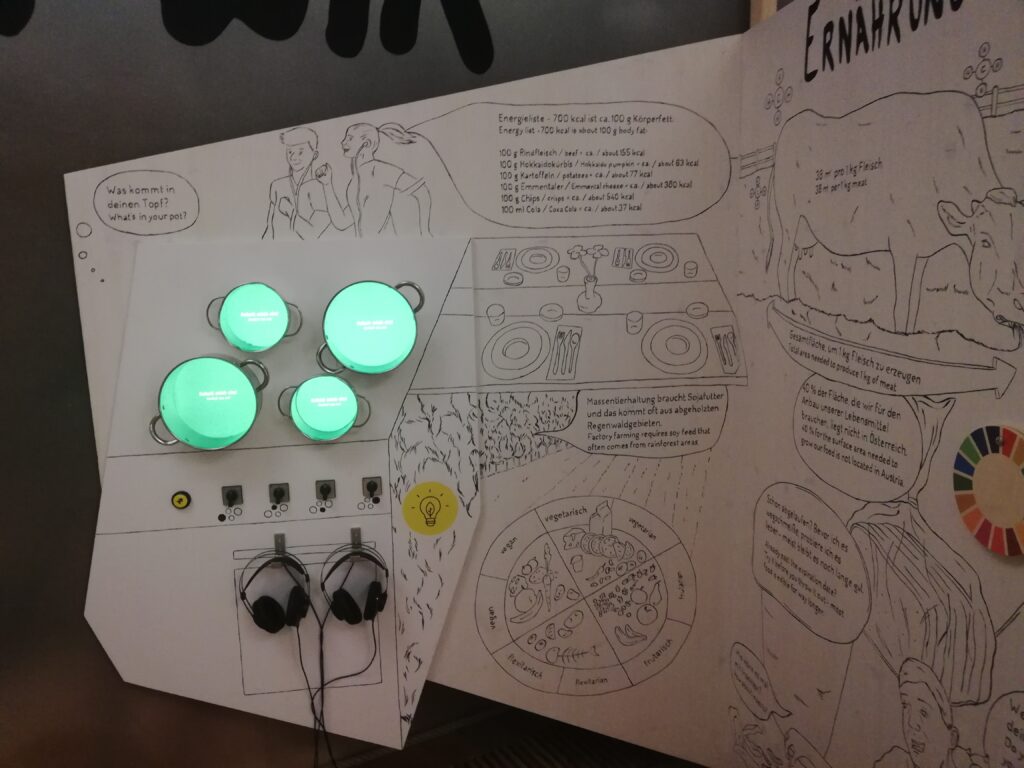

In our everyday life we use a lot of objects, but why? Primitive man developed an “interested” curiosity: his objective was to acquire enough knowledge of the environment to be able to use it afterward by bending it to his needs. That is how objects were born, and since then we have been progressing year after year to create more and more sophisticated ones. This curiosity that pushes us to invent gives us a feeling of pleasure when we manage to build the object and then make it work. It is the same pleasure, the same pride, that we feel when we find an answer to a question or a problem. We have learned through curiosity to create and master new objects, but nowadays, with the number of objects that populate our lives, we do not necessarily feel this pleasure towards all the objects we possess.

Here is an example. My parents have a washing machine. My mother learned to use it quite quickly, while my father has not developed any interest in it. Today he still hasn’t mastered it and systematically asks my mother which button he has to press to start it. As he feels no satisfaction when he interacts with this washing machine, he has no curiosity about how it works, unlike my mother who, when she starts a load, feels the satisfaction of crossing a line off her to-do list. That’s how we all work: we don’t choose to master every everyday object, only the ones that interest us. This is a clumsy interaction due to a lack of voluntary curiosity.

Conclusion

In this article, we have seen that our level of curiosity is related to the emotions generated by our interactions with our environment. It would be interesting to explore the link between emotion and object in more depth.

Definition, in progress

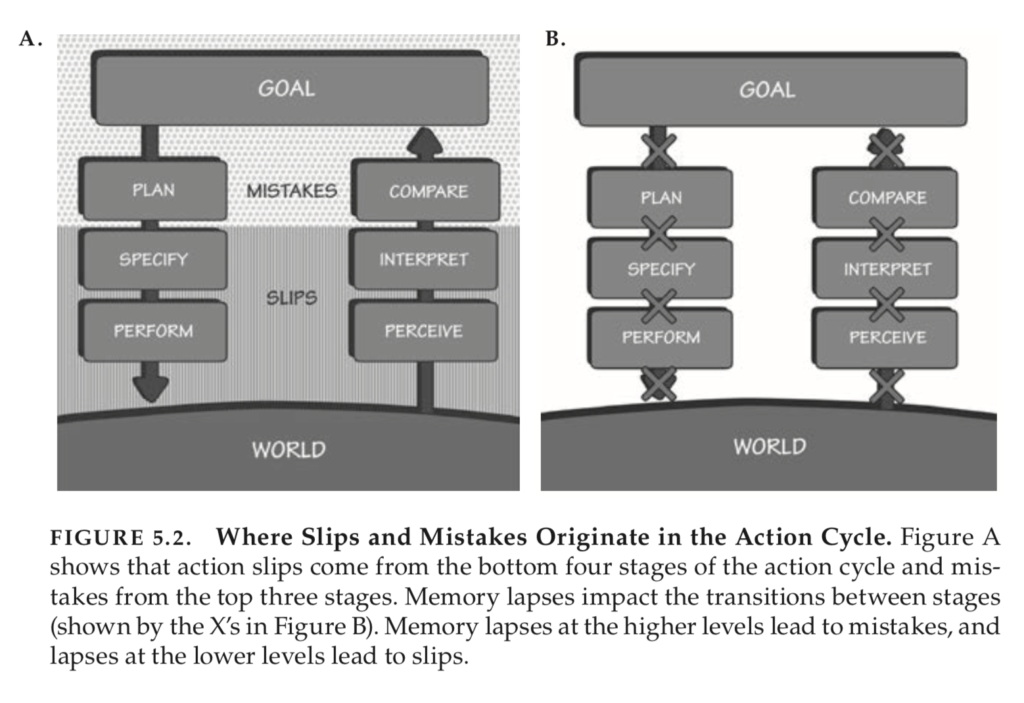

- A clumsy interaction doesn’t arise at the moment we use the object. It was there before and can come from the designer and his personal vision of how the object is used.

- A clumsy interaction can depend on the design of an object, and more specifically on the design of the experience of trying to manipulate it, activate it, make it work, and understand it.

- A clumsy interaction has several causes, one of which is mainly a matter of design. When the awkward interaction originates in inappropriate and deliberate behavior, it is a human error on the part of the user.

- A clumsy interaction can be the result of a lack of curiosity.

Sources:

Book: Les Pouvoirs de la curiosité, Flavia Mannocci, 2016

Article: Jean Piaget, Wikipedia