We still do not fully know what long-term effects technologies such as VR can have on our health or on our brain. An MRI scan of the brain cannot be done while using VR, because the head has to be kept still, which is difficult while wearing a headset. VR has many positive aspects: it can help people overcome trauma, evoke a realistic level of empathy, reduce pain, or even cure phobias. However, in the worst case, stimulus overload can also create a new trauma. Warnings, requests to take breaks, age limits, and the need to sign documents before using VR must not be ignored or treated carelessly under any circumstances. This is precisely why it is important to consider in advance which technology to use for which purpose, and whether a positive goal can be achieved with it.

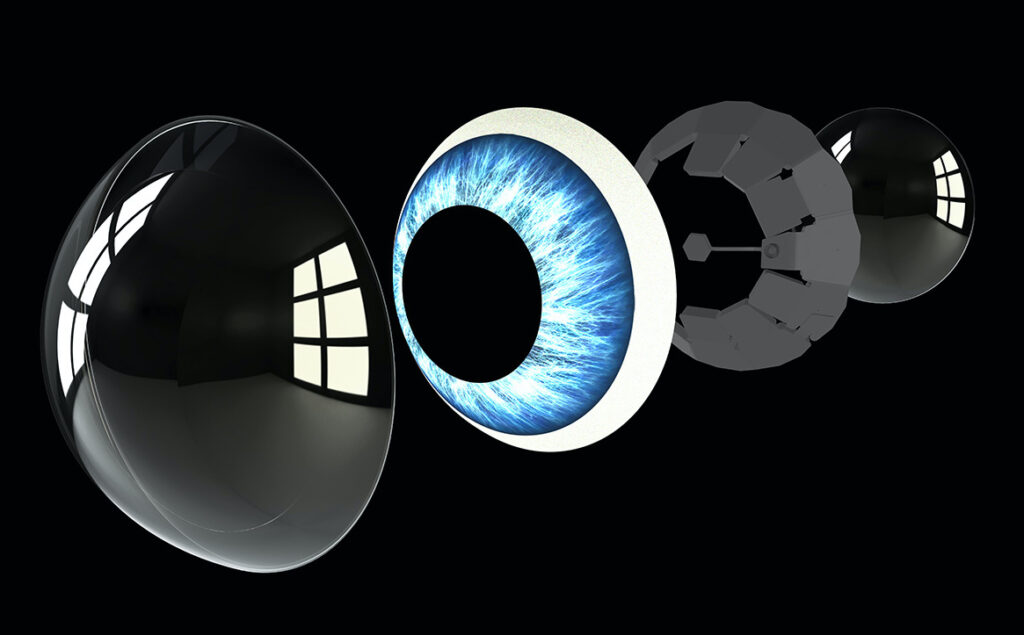

In the course of my research, I was mainly interested in headsets, glasses, or other devices that are relatively easy to put on, as safe as possible to use, and ideally do not completely shut out the user's real environment, or are at least easy to take off. Which technology, headset, or glasses will be the best fit for my project will become clear over time. Here is a small selection of technologies that currently interest me:

The mixed reality headset Microsoft HoloLens 2

- The headset is put on and tightened using a headband and an adjustment knob

- Eye tracking

- The headset does not need to be taken off because the visor can be folded upwards

- The display does not have to be precisely aligned with the eyes to work, thanks to the display technology used in the glasses (lasers, mirrors, waveguides)

- Not yet immersive enough for ordinary consumers

The first standalone mixed reality glasses Lynx-R1

- Does not require external tracking sensors or other devices

- Optical hand tracking

- Digital elements can be added to the surrounding environment, which is filmed in real time by two cameras on the display

- VR and AR at the same time

- Multiple cameras are used to film the environment

Small VR glasses from Panasonic

- Ordinary, commercially available VR glasses are much bigger and bulkier

- Stereo speakers are integrated

- Is put on like a normal pair of glasses

- Spatial tracking

- Positional tracking of both the head-mounted display and the controller via inside-out tracking cameras

AR glasses Rokid Vision 2

- Must be connected by cable to a smartphone, laptop, or tablet

- Is put on like a normal pair of glasses

- Has speakers for stereo sound

- The glasses are operated by voice control

- There are specially developed scenarios, such as a "Fantasy World": an immersive space in which the user can interact with the world through head, gesture, or voice control

- The user can move freely in the virtual space through room tracking

- No word yet on when it will hit the market

VR arcade games from The VOID and Sandbox VR

Both The VOID and Sandbox VR are currently on the verge of going out of business. Because of the COVID-19 pandemic, all arcades had to close, and Disney withdrew several important licenses, such as Star Wars, from The VOID, because the company could no longer pay for them due to expensive equipment and the associated debts. Still, the concept behind it is very exciting. Here are a few key points about The VOID:

- Using a headset, motion capture cameras, precise 3D body tracking, haptic suits, and props such as flashlights or blasters that represent weapons, players can explore the game in a physical environment while simultaneously interacting with a virtual world

- Fully immersive through VR and, at the same time, physical, because virtual objects are designed to match physical objects

- You are immersed in the game as the main character and, depending on the virtual world and story, have to complete certain tasks as a team

Sources

- Microsoft’s Hololens 2: A $3,500 Mixed Reality Headset for the factory, not the living room, Dieter Bohn (24.2.2019), https://www.theverge.com/2019/2/24/18235460/microsoft-hololens-2-price-specs-mixed-reality-ar-vr-business-work-features-mwc-2019

- Lynx-R1: Erste autarke Mixed-Reality-Brille vorgestellt [Lynx-R1: First standalone mixed reality glasses unveiled], Tomislav Bezmalinovic (4.2.2020), https://mixed.de/lynx-r1-erste-autarke-mixed-reality-brille-vorgestellt/

- CES 2021: Panasonic zeigt extra-schlanke VR-Brille [CES 2021: Panasonic shows extra-slim VR glasses], Tomislav Bezmalinovic (12.1.2021), https://mixed.de/ces-2021-panasonic-zeigt-extra-schlanke-vr-brille/

- Rokid Vision 2: AR-Brille kommt in neuem Design [Rokid Vision 2: AR glasses come in a new design], Tomislav Bezmalinovic (15.1.2021), https://mixed.de/rokid-vision-2-ar-brille-kommt-in-neuem-design/

- The VOID (2021), http://www.thevoid.com/what-is-the-void/

- The Void: Highend-VR-Arcade steht vor dem Aus [The Void: High-end VR arcade faces closure], Tomislav Bezmalinovic (17.11.2020), https://mixed.de/the-void-highend-vr-arcade-steht-vor-dem-aus/