Bandoneon! (A Combine) is a work by the American pianist and experimental composer David Tudor (the “!” stands for “factorial”).

This is his second work as a composer and his first full concert work. It was made for a nine-night special event held in New York in October 1966, which brought together various artistic expressions such as avant-garde dance, music and theater. The event was a collaboration between 10 New York artists (including John Cage) and 30 engineers and scientists from the famous Bell Laboratories, an American industrial research and scientific development company (now owned by Nokia).

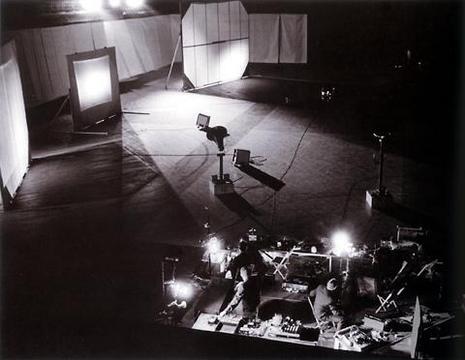

The event, titled “9 Evenings: Theatre and Engineering”, took place at the 69th Regiment Armory in New York City.

A bandoneon (a musical instrument that originated in Germany more than 150 years ago and is now frequently used in Argentine tango) served as the input instrument.

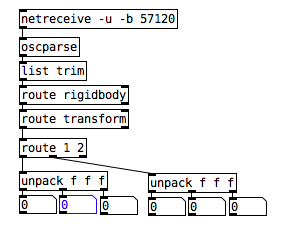

The bandoneon's signal was fed into a complex audio and visual modification system. This system could move the sound from speaker to speaker (12 in total) while also controlling the lights and video images that animated the entire venue.

Luckily, the performance was filmed and later released on DVD, although it is not easy to find.

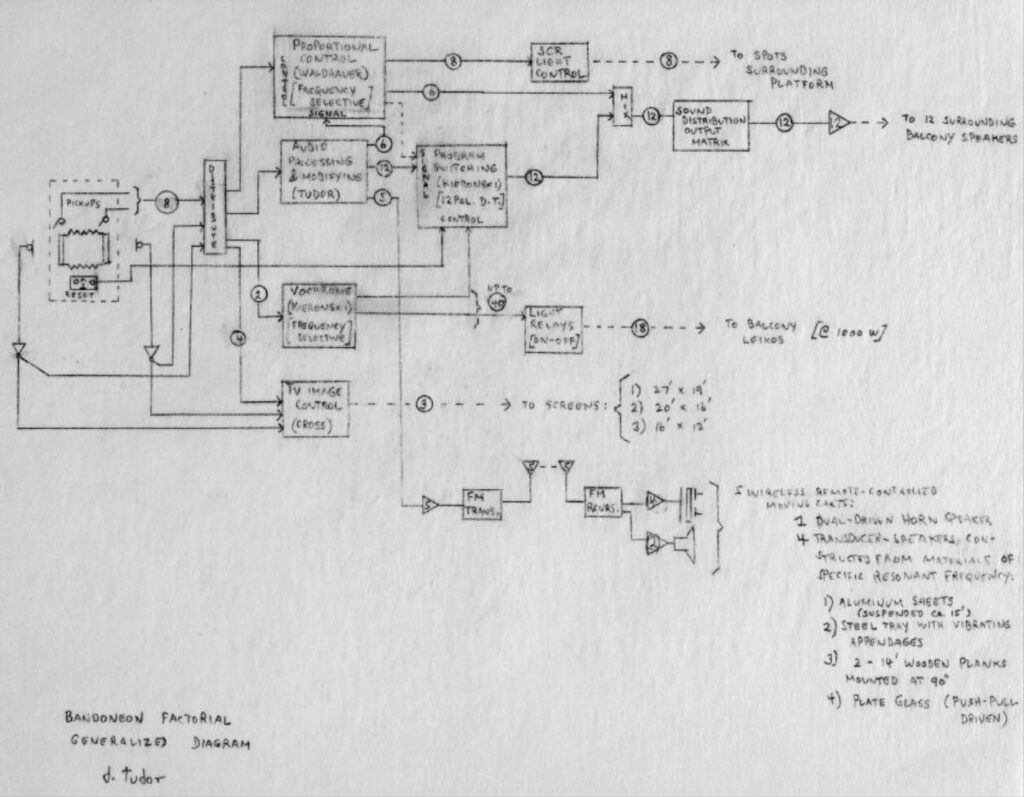

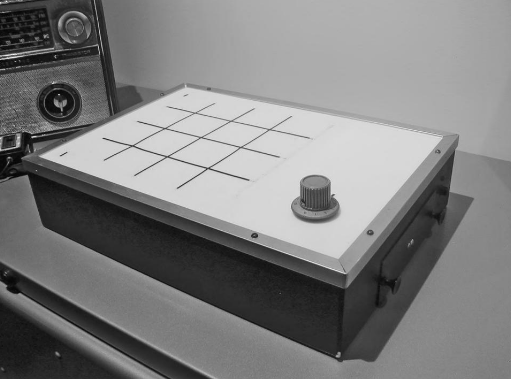

Here is the diagram of the performance:

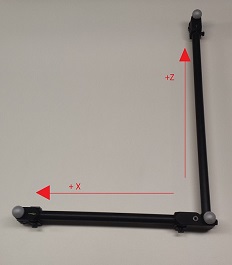

The audio signal is first sent to a single distributor and then transmitted to the Proportional Control System, an instrument developed by Fred Waldhauer. It was used to control the intensity of the sound from the 12 balcony loudspeakers surrounding the entire space, and the intensity of the 8 spotlights surrounding the platform where Tudor sat playing the bandoneon.

The signal then goes to an electronic sound processor built by Tudor [Audio Processing & Modifying], and then to the Vochrome, an instrument developed by Robert Kieronski that converts a continuous signal into discrete triggers. It enabled the bandoneon sound to control the switching of the balcony spotlights via light relays, as well as the switching of audio signals.

Finally, it goes to the TV Image Control, developed by Lowell Cross.
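The idea behind the Vochrome, turning a continuous signal into discrete triggers, can be illustrated with a small threshold detector. This is only a sketch under stated assumptions, not a model of Kieronski's actual instrument: the function name `to_triggers`, the two threshold values and the normalised level readings are all hypothetical. It uses separate "on" and "off" thresholds (hysteresis) so that a level hovering near a single threshold would not make a relay chatter.

```python
def to_triggers(levels, on_threshold=0.6, off_threshold=0.4):
    """Turn a sequence of continuous levels into discrete on/off events.

    Hypothetical illustration: `levels` are assumed to be normalised
    signal levels (0.0 to 1.0), and both thresholds are arbitrary.
    """
    state = False  # False = relay open, True = relay closed
    events = []
    for index, level in enumerate(levels):
        if not state and level >= on_threshold:
            state = True
            events.append((index, "on"))
        elif state and level <= off_threshold:
            state = False
            events.append((index, "off"))
    return events


if __name__ == "__main__":
    readings = [0.1, 0.5, 0.7, 0.55, 0.45, 0.3, 0.65]
    print(to_triggers(readings))  # [(2, 'on'), (5, 'off'), (6, 'on')]
```

Note that the readings 0.55 and 0.45 produce no event: once the relay is on, it stays on until the level drops to the lower threshold.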

The Proportional Control System modulates the intensity of the light from the lamps placed around the stage, while the spotlights on the balcony are switched by the Vochrome.
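To give a rough feel for what proportional control over 12 loudspeakers involves, here is a minimal sketch that moves a sound around a ring of speakers using an equal-power crossfade between neighbours. It is an assumption made purely for illustration: the source does not describe how Waldhauer's system computed its levels, and `speaker_gains` and its `position` parameter are hypothetical names.

```python
import math


def speaker_gains(position, n_speakers=12):
    """Return one gain per speaker for a sound at `position` on a ring.

    Hypothetical illustration: `position` is a float where 0.0 is
    speaker 0, 1.0 is speaker 1, and so on, wrapping around the ring.
    An equal-power (sine/cosine) crossfade is assumed between the two
    nearest speakers; all other speakers are silent.
    """
    pos = position % n_speakers
    lower = math.floor(pos) % n_speakers  # speaker just behind the sound
    upper = (lower + 1) % n_speakers      # speaker just ahead of it
    frac = pos - math.floor(pos)          # 0.0 at `lower`, near 1.0 at `upper`

    gains = [0.0] * n_speakers
    gains[lower] = math.cos(frac * math.pi / 2)
    gains[upper] = math.sin(frac * math.pi / 2)
    return gains


if __name__ == "__main__":
    # Halfway between the last speaker and the first one.
    gains = speaker_gains(11.5)
    print(round(gains[11], 3), round(gains[0], 3))
```

With the sine/cosine law the squared gains always sum to 1, so the total power stays constant as the sound travels from speaker to speaker.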

Another interesting aspect of this work is that the acoustic space itself was used as an oscillator, by means of acoustic feedback.
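The general principle of feedback acting as an oscillator can be shown with a toy simulation: a signal is delayed (standing in for the travel time between loudspeaker and microphone), amplified, and fed back into itself. When the loop gain is above 1, a tiny disturbance grows into a sustained, repeating signal; below 1 it dies away. This is a simplified sketch, not a model of the Armory or of Tudor's setup: the function `feedback_loop`, the gain values, the delay length and the `tanh` limiter (standing in for the saturation of real amplifiers and speakers) are all assumptions.

```python
import math


def feedback_loop(gain, delay_samples, n_samples, seed=1e-3):
    """Simulate a delayed feedback loop with a soft limiter.

    Hypothetical illustration: `seed` is a tiny initial disturbance,
    `delay_samples` stands in for the acoustic path delay, and tanh
    keeps the output bounded the way real equipment saturates.
    """
    buffer = [0.0] * delay_samples
    buffer[0] = seed
    output = []
    for n in range(n_samples):
        slot = n % delay_samples
        delayed = buffer[slot]              # what was emitted one delay ago
        sample = math.tanh(gain * delayed)  # amplify, then soft-limit
        buffer[slot] = sample               # feed it back into the loop
        output.append(sample)
    return output


if __name__ == "__main__":
    delay = 50
    growing = feedback_loop(gain=1.5, delay_samples=delay, n_samples=100 * delay)
    fading = feedback_loop(gain=0.8, delay_samples=delay, n_samples=100 * delay)
    print("gain 1.5, final peak:", max(growing[-delay:]))
    print("gain 0.8, final peak:", max(fading[-delay:]))
```

In this sketch the loop with gain 1.5 settles into a steady pulse that repeats once per delay period, while the loop with gain 0.8 fades to silence.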

Here is an excerpt from the performance:
