Part 2: Deep Learning

Neural Networks

Neural networks are a type of machine learning model inspired by the human brain. They consist of many interconnected nodes (neurons). The goal of using a neural network is to train a model, which is a file that has been trained by machine learning algorithms to recognize certain properties or patterns. Models are trained on a set of data, and once trained they can be used to make predictions about new data. A neural network is split into layers, and every neuron belongs to one of them. These layers are an input layer, an output layer, and one or more hidden layers in between. Mathematically, a neuron's output can be described by the following function:

$$f(w^T x + b)$$

In the function above, $w$ is a weight vector, $x$ is a vector of inputs, $b$ is a bias, and $f$ is a nonlinear activation function. During training, the weights $w$ and the bias $b$ are adjusted so that the model better describes a set of input data (a dataset). For a neuron with $n$ inputs, the argument of $f$ is the sum of the weighted inputs plus the bias:

$$\sum_{i=1}^{n} w_i x_i + b = w_1 x_1 + w_2 x_2 + \dots + w_n x_n + b$$

Each neuron takes such a weighted sum and passes it through its activation function to produce a new value.
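The neuron formula above can be sketched in a few lines of Python. This is only an illustration: the function names `sigmoid` and `neuron_output`, the choice of the sigmoid as activation function, and the example numbers are assumptions made for this sketch and do not come from a particular library.

```python
import math


def sigmoid(z):
    # S-shaped activation function with outputs strictly between 0 and 1.
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(weights, inputs, bias, activation=sigmoid):
    # Weighted sum of the inputs plus the bias: w1*x1 + w2*x2 + ... + wn*xn + b
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The activation function turns the weighted sum into the neuron's output.
    return activation(z)


# Hypothetical example values (chosen only for illustration).
w = [0.5, -0.25, 0.1]
x = [1.0, 2.0, 3.0]
b = 0.2

print(neuron_output(w, x, b))
```

Passing a different function as `activation` changes only the last step. The weighted sum stays the same.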

Training

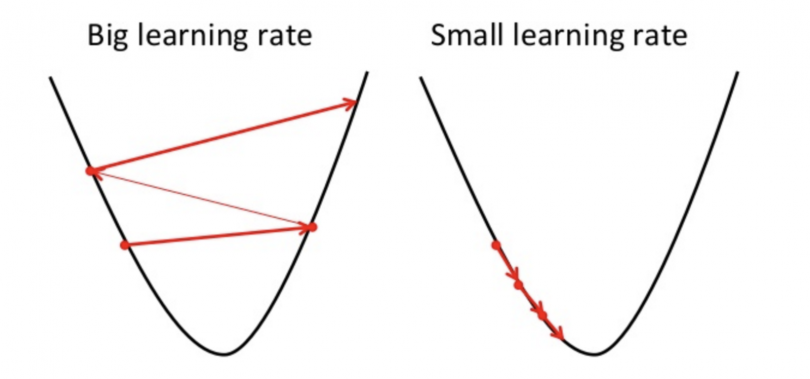

Before training starts, the network initializes the weights of its neurons with random values and the biases with zero. During training it then works through the individual pieces of data (examples) in the dataset. Neural network training consists of three parts:

- Loss function: evaluates how well the network models the dataset. The better the predictions are, the smaller the output of the loss function becomes.

- Backpropagation: computes the gradients. The output of the loss function measures the difference between the current prediction and the desired value. This error is sent back layer by layer from the output to the input, which determines how much each neuron's weights influenced the error.

- Gradient update: adjusts the neurons' weights based on those gradients. The gradients indicate how the parameters have to change to minimize the loss (that is, to decrease the output of the loss function). To modify the weights, the gradients are multiplied by a defined factor (the learning rate) and subtracted from the weights. This factor is kept very small (for example 0.001) so that each weight change remains small and an update does not jump over the point where the loss is smallest (ideally close to zero).

Illustration of the learning rate
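The training loop described above can be sketched for the smallest possible case: a single neuron with one weight, one bias, and no activation function. Everything in this sketch is an assumption made for illustration, including the mean squared error as loss function, the toy dataset, the fixed (not random) starting weight, and the names `loss`, `gradients`, and `train`. Because the model is so small, the gradients are written out by hand instead of being computed by backpropagation through several layers.

```python
def loss(w, b, data):
    # Mean squared error: small when the predictions w*x + b are close to y.
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradients(w, b, data):
    # Derivatives of the mean squared error with respect to w and b.
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return grad_w, grad_b


def train(data, learning_rate=0.01, steps=2000, w=0.5, b=0.0):
    # w starts at a fixed value so the run is reproducible; b starts at zero.
    for _ in range(steps):
        grad_w, grad_b = gradients(w, b, data)
        # Subtract the gradients, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy dataset generated from y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = train(data)
print(w, b, loss(w, b, data))
```

On this toy dataset the small learning rate lets `w` and `b` approach 2 and 1. A much larger value such as 0.5 makes every update overshoot the minimum, so the loss grows instead of shrinking.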

Layers

As mentioned above, a neural network consists of multiple layers, which are groups of neurons. One or more of them are hidden layers. Each hidden layer calculates new values from the values of the previous layer using a specific function (the activation function).

Hidden layers come in various types, such as linear or dense layers, and the type of a layer determines which calculations it performs. As problems become more complex, the functions the network has to represent must become more complex as well. Stacking multiple linear layers one after another does not help, because the whole stack could be written out as a single linear function. Dense layers exist for this reason: they make use of activation functions and can therefore approximate more complex functions. Activation functions are applied in every layer rather than only to the input values, which adds complexity and can also lead to more stable and faster results.
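The claim that stacked linear layers are redundant can be checked with a small sketch. The weights, biases, and helper names (`matvec`, `matmul`, `linear`, `relu`) are made up for this illustration. Two linear layers are applied in sequence and compared with one combined layer whose weight matrix is W2·W1 and whose bias is W2·b1 + b2.

```python
def matvec(W, x):
    # Matrix-vector product for a matrix stored as a list of rows.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    # Matrix-matrix product A·B.
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def linear(W, b, x):
    # A linear layer: W·x + b, without an activation function.
    return [z + bi for z, bi in zip(matvec(W, x), b)]


def relu(v):
    # ReLU activation applied to every element.
    return [max(0.0, z) for z in v]


# Hypothetical weights and biases for two layers.
W1 = [[1.0, -2.0], [0.5, 1.0]]
b1 = [0.0, -1.0]
W2 = [[2.0, 1.0]]
b2 = [0.5]
x = [1.0, 2.0]

# Two linear layers in sequence ...
stacked = linear(W2, b2, linear(W1, b1, x))

# ... equal one linear layer with combined weights and bias.
W = matmul(W2, W1)
b = [z + bi for z, bi in zip(matvec(W2, b1), b2)]
collapsed = linear(W, b, x)

# With an activation function in between, the stack no longer collapses.
with_relu = linear(W2, b2, relu(linear(W1, b1, x)))

print(stacked, collapsed, with_relu)  # [-4.0] [-4.0] [2.0]
```

The first two results are identical, so the second linear layer adds nothing that a single layer could not express. Putting an activation function between the layers changes the result.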

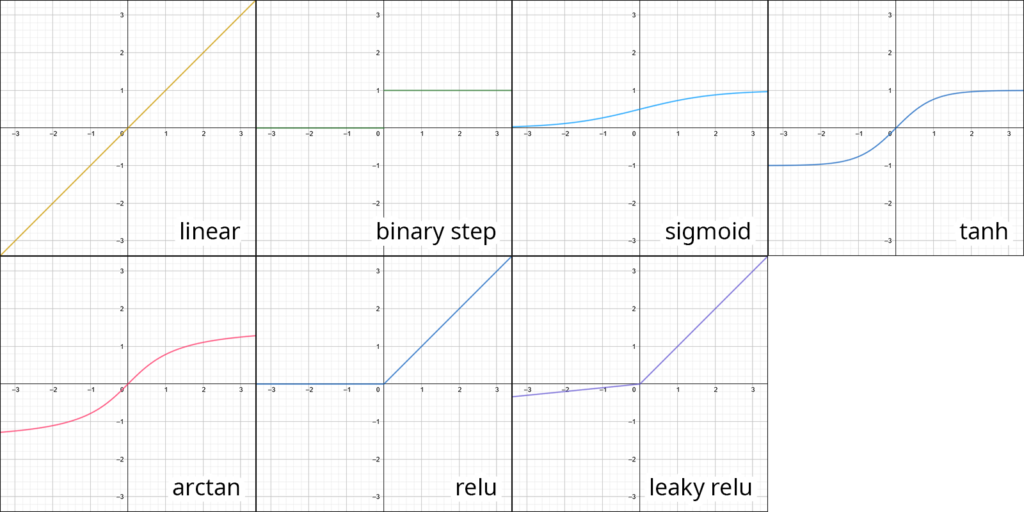

The following list introduces some commonly used activation functions:

- linear (the output equals the input)

- binary step (the output is either 0 or 1)

- sigmoid (S-shaped curve with values strictly between 0 and 1, never reaching 0 or 1)

- tanh (like the sigmoid, but maps to values between -1 and 1)

- arctan (maps the input to values between -pi/2 and +pi/2)

- ReLU, the Rectified Linear Unit (sets any negative value to 0)

- leaky ReLU (does not completely remove negative values but drastically lowers their magnitude)
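The activation functions in the list above can be written as short Python functions. This is a sketch under assumptions: the step threshold at 0 and the leaky ReLU slope of 0.01 are common choices that the text does not specify, and the function names are chosen here for readability.

```python
import math


def linear(z):
    # Output equals input.
    return z


def binary_step(z):
    # Assumed threshold at 0: output is 1 for z >= 0, otherwise 0.
    return 1.0 if z >= 0 else 0.0


def sigmoid(z):
    # S-shaped curve with values strictly between 0 and 1.
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    # Like the sigmoid, but with values between -1 and 1.
    return math.tanh(z)


def arctan(z):
    # Values between -pi/2 and +pi/2.
    return math.atan(z)


def relu(z):
    # Negative values become 0; positive values pass through.
    return max(0.0, z)


def leaky_relu(z, slope=0.01):
    # Negative values are scaled down by an assumed slope instead of removed.
    return z if z >= 0 else slope * z


activations = [linear, binary_step, sigmoid, tanh, arctan, relu, leaky_relu]
for z in (-2.0, 0.0, 2.0):
    print(z, [round(f(z), 4) for f in activations])
```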

Deep Learning

As mentioned before, a neural network contains different layers. A neural network with more than one hidden layer is called a deep neural network, and the process of training such a network is called deep learning. Deep neural networks have a few advantages over shallow neural networks. As mentioned above, activation functions introduce non-linearity, and stacking many dense layers one after another makes it possible to compute solutions to much more complex problems. Including audio (finally)!

Read more:

http://www.deeplearningbook.org

https://www.techradar.com/news/what-is-a-neural-network

https://www.ibm.com/cloud/learn/neural-networks

https://www.mygreatlearning.com/blog/activation-functions/

https://algorithmia.com/blog/introduction-to-optimizers

https://medium.com/datathings/neural-networks-and-backpropagation-explained-in-a-simple-way-f540a3611f5e

https://medium.com/datathings/dense-layers-explained-in-a-simple-way-62fe1db0ed75

https://medium.com/deeper-learning/glossary-of-deep-learning-batch-normalisation-8266dcd2fa82

https://semanti.ca/blog/?glossary-of-machine-learning-terms